Blog

A random collection of thoughts on a variety of topics

(27 Posts)

“Bloom Filters in the wild”

2020-10-15

At my job, it's not too often that I have a use for "exotic" data structures. Most of the time, a simple HashMap, Set, or List (vector) will do the job. Recently, however, a situation came up where we got to apply a Bloom Filter to solve our problem. If you're unfamiliar with Bloom Filters, they are a space-optimized, probabilistic set data structure. They have a neat property: they can tell you that something is probably in a set, or definitely not in a set. So they are a great fit if you can tolerate false positives but can never accept false negatives. They are also a good choice if you have limited space, or want to know exactly how much space you're using, because they don't grow or shrink no matter how much data you "store" in them. The downside is that the more data you store, the more likely a false positive becomes. In our case, we could not store the full data set in memory, so a Bloom Filter was a perfect fit.

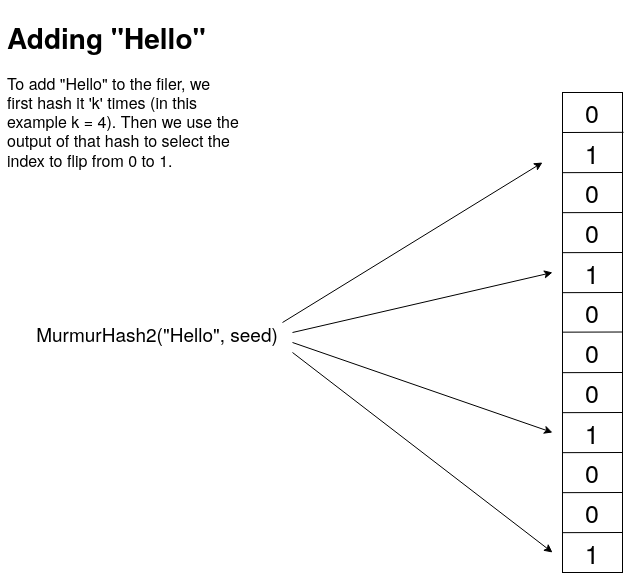

Bloom filters are a very simple data structure. They are simply an array of bits (a bitmap). These bits get set as you add data to the filter. When you add new data to the filter, the data is run through a hash function, and the output of that hash function is used to select the index of the bit to flip to true. Normally you use a few hashes, or different parts of the same hash, to set a few bits per piece of data. Ideally, your hash produces values in a nice uniform distribution. Let's take a look at an example.

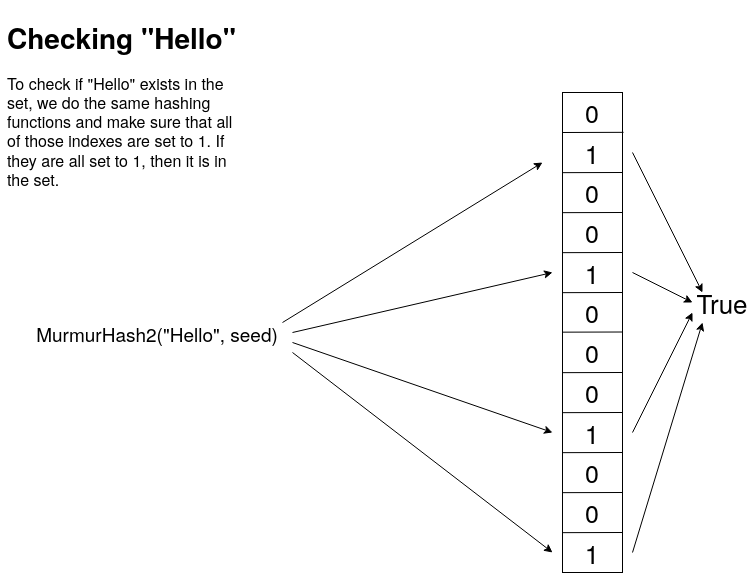

In this example, I use the Murmur2 hash 4 times with 4 different seed values. The output of the Murmur2 hash is a 32 bit unsigned number. I can then take this number and use the modulus to get an index. Now if I wanted to check if an item was in the set, I would just do the exact same hashing and get the same indexes, and then verify that those indexes are all 1's. If there are any 0's then this item was definitely never added to the set.

Now, it should be clear why this data structure has false positives. You only have a limited number of bits to map values into, so there can be collisions. Let's say, for example, that I have added "Hello" into the filter, and it happens to map to bits 1, 2, 3 and 4. Later I check if the word "Boston" is in the set, and it also happens to map to bits 1, 2, 3 and 4. Now I have a false positive. However, the larger we make the bitmap, and the more uniform the output of the hash function is, the less likely we are to get a collision. It should also be clear why you don't get false negatives. Adding an item sets all of its bits, and bits are never cleared, so if any of the indexes are false, or 0, the item was never added.
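To make the add and check steps concrete, here is a minimal sketch of the idea. It is not the code from this post's repository: the seeded FNV-1a hash (standing in for Murmur2), the 64-bit bitmap, the seed values, and the names `fnv1a`, `kSeeds`, and `TinyBloom` are all assumptions chosen to keep the example self-contained.

```cpp
#include <bitset>
#include <cstdint>
#include <string>

// Seeded FNV-1a. This is a stand-in hash picked only so the sketch has no
// external dependencies; a real filter would likely use something like Murmur.
inline std::uint32_t fnv1a(const std::string& s, std::uint32_t seed) {
    std::uint32_t h = 2166136261u ^ seed;
    for (unsigned char ch : s) {
        h ^= ch;
        h *= 16777619u;
    }
    return h;
}

// Illustrative seeds: one hash per seed, so four bits are touched per item.
constexpr std::uint32_t kSeeds[] = {1, 2, 3, 4};

// A toy 64-bit filter (far too small for real use).
class TinyBloom {
    std::bitset<64> bits;

public:
    // Set the bit selected by each seeded hash.
    void add(const std::string& s) {
        for (std::uint32_t seed : kSeeds) {
            bits.set(fnv1a(s, seed) % bits.size());
        }
    }

    // True only if every selected bit is set; a single 0 means "never added".
    bool contains(const std::string& s) const {
        for (std::uint32_t seed : kSeeds) {
            if (!bits.test(fnv1a(s, seed) % bits.size())) return false;
        }
        return true;
    }
};
```

With a bitmap this small, unrelated strings will collide quickly, which is exactly the false positive behavior described above. An item that has been added is always reported as present.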

Now that we understand how a Bloom Filter works, let's go ahead and implement one of our own. For this project I will be using C++. I will make all of the code available on my GitHub.

class IBloomFilter {

public :

virtual void add(const std::string& s) = 0;

virtual bool contains(const std::string& s) const = 0;

virtual void clear() = 0;

virtual double getProbabilityOfFalsePositive() const = 0;

virtual unsigned int getCount() const = 0;

virtual unsigned long int getBitCount() const = 0;

protected :

virtual ~IBloomFilter(){};

};

Here is the interface that I created that defines what a Bloom Filter can do at a high level. Notice that there is no remove() function. This is because the same thing that can cause a false positive, can also cause multiple removals. Using the above example, if I tried to remove "Boston" I would also remove "Hello" and every other word that even partially maps to that sequence of bits.

I decided to code up the Bloom Filter in two different ways: a fixed size Bloom Filter where you specify the number of bits you want in the bitmap, and a dynamic Bloom Filter where you specify the number of elements you want to store, along with a worst case probability of false positives. Given this data, the dynamic filter will generate a bit array of the proper size. First, let's take a look at the header for the FixedBloomFilter.

template <std::size_t N>

class FixedBloomFilter : public IBloomFilter {

std::unique_ptr<std::bitset<N>> bitmap;

unsigned long int m;

unsigned int c;

unsigned int k;

std::vector<uint32_t> seeds;

public :

FixedBloomFilter();

void add(const std::string& s);

bool contains(const std::string& s) const;

void clear();

double getProbabilityOfFalsePositive() const;

unsigned int getCount() const;

unsigned long int getBitCount() const;

};

For the fixed size filter, I decided to use the std::bitset, as it is the ideal structure to store bits in. Next I define a few needed values. m is the number of bits, c is the current count of elements stored in the filter, k is the number of hashes I run on each entry, and seeds will hold the seeds for those hashes so each hash produces a different result. For the FixedBloomFilter we know these values, so we don't need to calculate them, but in the DynamicBloomFilter we will calculate these on the fly.

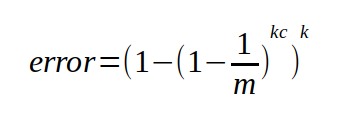

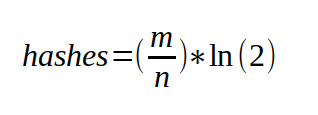

For both Bloom Filters, we will still calculate the error probability the same way using the following equation.

This will give us the worst case probability of getting a false positive. I say worst case because I found that in practice the real chance of a false positive is lower, sometimes by half or more. I suspect this is because there are certain "non-sane" values that will never be checked. For example, if my Bloom Filter is holding English words, and I want to compare the words in two books, chances are I will never check for gibberish words like "hvieioe". However, this equation still accounts for them.

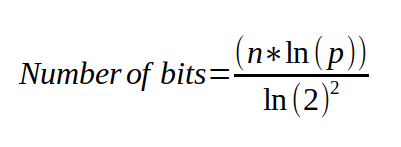

Now, for the DynamicBloomFilter I take into the constructor the maximum number of elements I expect the filter to hold, and the desired worst case probability that I want. Using those values, I can calculate the exact number of bits, and the number of hashes that I will need to fulfill these requirements.
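As a rough sketch of that calculation, the block below uses the standard textbook Bloom filter formulas. This is an assumption on my part, since the equations in this post are only available as images; the function names `optimalBits`, `optimalHashes`, and `falsePositiveRate` are hypothetical, and the real DynamicBloomFilter may round differently.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Bits needed to hold n items at a target false positive rate p:
//   m = -n * ln(p) / (ln 2)^2
inline std::size_t optimalBits(std::size_t n, double p) {
    assert(n > 0 && p > 0.0 && p < 1.0);
    const double ln2 = std::log(2.0);
    const double m = -static_cast<double>(n) * std::log(p) / (ln2 * ln2);
    return static_cast<std::size_t>(std::ceil(m));
}

// Number of hashes that minimizes false positives for m bits and n items:
//   k = (m / n) * ln 2, rounded to the nearest whole hash (at least 1)
inline unsigned optimalHashes(std::size_t m, std::size_t n) {
    assert(m > 0 && n > 0);
    const long k = std::lround(static_cast<double>(m) / static_cast<double>(n) * std::log(2.0));
    return k < 1 ? 1u : static_cast<unsigned>(k);
}

// Approximate false positive rate after c insertions:
//   p = (1 - e^(-k * c / m))^k
inline double falsePositiveRate(std::size_t m, unsigned k, std::size_t c) {
    assert(m > 0 && k > 0);
    const double exponent = -static_cast<double>(k) * static_cast<double>(c) / static_cast<double>(m);
    return std::pow(1.0 - std::exp(exponent), static_cast<double>(k));
}
```

For 52,875 elements and a desired probability of 0.01, `optimalBits` comes out at roughly 506,810 bits, which is a little under 62 KB.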

class DynamicBloomFilter : public IBloomFilter {

unsigned long m;

unsigned int n;

double p;

unsigned int c;

std::vector<bool> bitmap;

std::vector<uint32_t> seeds;

public :

explicit DynamicBloomFilter(unsigned int n, double desired_p);

void add(const std::string& s);

bool contains(const std::string& s) const;

void clear();

double getProbabilityOfFalsePositive() const;

unsigned int getCount() const;

unsigned long int getBitCount() const;

};

To see the details of the implementation, check out my source code repo on GitHub. For brevity, I'm just going to show the add and contains code here.

void DynamicBloomFilter::add(const std::string& s) {

const void* ptr = reinterpret_cast<const void*>(s.data());

std::for_each(seeds.begin(), seeds.end(), [=, this](const uint32_t& seed) {

uint32_t h = MurmurHash2(ptr, s.size(), seed);

bitmap[h%m] = true;

});

c++;

}

bool DynamicBloomFilter::contains(const std::string& s) const {

const void* ptr = reinterpret_cast<const void*>(s.data());

return std::all_of(seeds.begin(), seeds.end(), [=, this](const uint32_t& seed) {

uint32_t h = MurmurHash2(ptr, s.size(), seed);

return bitmap[h%m];

});

}

This code should look just like the picture that I showed above. For both adding and checking we hash the input value using the MurmurHash2() function. The only difference is that when adding, we set those bits to true (or 1), and when checking we just check that they are all true (or 1's).

Now that we have our two versions of the Bloom Filter, how do they perform? First let's take a look at the dynamic filter. This filter takes in two parameters: the expected number of elements to be stored in the filter, and the desired probability of a false positive. For my example, I am using /usr/share/dict/cracklib-small, which is a small dictionary file containing 52,875 English words. My verification set is /usr/share/dict/american-english, which is a larger dictionary containing 102,305 words. When I create my new DynamicBloomFilter I set the max expected values to be 52875 and the desired error probability to be 0.01. Here are the results.

BloomFilter is 61.8663 K bytes

Bloom Filter loaded with 52876 words

Error probability: 0.0101441

Bloom Filter matched verification set 99.3783%

Actual Error Probability: 0.00621664

As you can see, the DynamicBloomFilter was able to take in our parameters and create the optimal filter size, and hash count to achieve our desired probability. It was able to do it in just under 62K of memory. Keep in mind that my actual data set was 468K. So that is a 7.5X reduction in size, with 99.4% accuracy. Not too bad.

For the FixedBloomFilter we can get similar results as long as we use the correct size for the bitmap. Using the smallest size FixedSize::TINY, we only get an 8KB filter, and a terrible 76.98% accuracy on our test set. However once we jump up one level to the FixedSize::SMALL, now we have a 128KB filter, with 99.9% accuracy. However, compared to the Dynamic filter, we are double the size and don't gain much accuracy. Something to consider when choosing your implementation.

BloomFilter is 128 K bytes

Bloom Filter loaded with 52876 words

Error probability: 0.00111332

Bloom Filter matched verification set 99.9365%

Actual Error Probability: 0.000635349

The DynamicBloomFilter is better when you know the size of the set you're going to load into it, and just want to control for the error probability. The FixedBloomFilter is great when you have space constraints, or you don't know how much data you're going to load, but just want a probabilistic way of knowing if you have "seen this before" (like for caching schemes). Both have their pros and cons, so I leave it to you to decide which way works better for your application. Ultimately, in our application we just chose a very large filter, because we didn't know the upper limit of items we were going to add, and because even a few hundred megabytes was still gigabytes smaller than our actual data set.

“Website Reboot”

2020-09-26

Well, I would ask where the last 5 years went, but she is running around with a crown on pretending to freeze me because she just watched Frozen 2. However, I have decided to make the time to refresh my website, finally add SSL, update the stack it runs on, and change how I manage my blog. Overall I think this new, cleaner look is refreshing, and improves the aesthetics. One nice feature of my new stack is that now I can blog directly on my server, and not need to go to Tumblr. I can also iterate faster than with my old stack, which was built on Ruby.

Looking back at my analytics data, my most viewed blog posts have been my "teaching" posts. Specifically, my post on UDP has been my biggest hit. Given this, my goal moving forward will be to do more posts on the subject of networking, threading, and other "complicated" topics. I really enjoy making posts like this because it has the fantastic side effect of showing me where my own knowledge is lacking. Many times I sit down to write a post and I realize that I only have a rudimentary idea of what I am trying to show. Usually I have learned enough about something to use it effectively in my job, but not necessarily well enough to teach it to someone else. By forcing myself to teach it to someone else, I also fill in the gaps in my own knowledge and become a more well rounded engineer as a result.

During my hiatus from my website I was able to complete another video course for Packt Publishing called C++ Standard Template Library in Practice. In it I cover the fundamentals and usage of the standard library, with a focus on things added in C++11, 14 and 17. I even give a C++20 preview. If you're interested in learning how to use the standard library to make your C++ programs more concise, I recommend this course. It is also available on Udemy.

Last time I posted, I mentioned that I was heavily involved in Brazilian Jiu Jitsu, which is still very true to this day. In fact I started a club at my company, and it was even featured in a video. You can check it out on YouTube here.

Well, that's all for my update for now. I'm going to get to work on my next technical blog and see where that takes me. Hopefully I will get some posts out before my schedule becomes too crazy again. Thanks for checking out my new site!

“Where have you been?”

2015-08-01

It is hard to believe how quickly time flies! It has been over a year since I posted any updates to my blog, but I have been so busy it feels like yesterday that I was talking about Dart UDP support.

Well, first and foremost, I have completed my first video course with PACKT Publishing. It’s called Introducing Dart, and it is a rapid-paced tour of the Dart client side programming language features. It was written and designed to be used by people who may be familiar with other languages, but want to get up to speed on Dart ASAP. If you are interested in checking it out, it can be found here at the PACKT website.

I think these videos are a great learning tool because of the rapid no-nonsense nature of instruction. I don’t waste time with trivial details, and every little piece of syntax. We simply work together on creating a browser based game, and get things working step by step. Honestly, anyone can learn the syntax quickly enough by looking at the tutorial on the Dart homepage. But learning how to express your ideas with the language is a different challenge that I hope I can help you overcome.

Okay, so enough self promotion for now. In other news, I have also been getting deeply involved in Jiu-Jitsu and submission wrestling. Besides the fact it is great exercise, it is just a super fun activity to do, and a great set of skills to learn. I like to think of it as the thinking man’s fighting. It’s like playing chess, but the chess pieces are your limbs and neck.

Going forward I hope to keep up with this better, and make some more tutorials and such. I seem to be getting good at this whole Dart thing, so maybe I will continue with that.

So far I have covered a variety of sockets that are supported by Dart. Just recently the Dart developers added the ability to use UDP sockets to the dart:io library. So for this installment I will cover how to use Dart to send and receive data over UDP. I will start with an explanation of how UDP works, then move on to specific client and server examples in Dart. I will also present UDP multicasting in Dart, and cover some gotchas that come up when trying to work with it.

What is UDP?

UDP is the User Datagram Protocol. It is different from TCP (Transmission Control Protocol) in that it does not establish a connection to the destination. UDP is designed to send datagrams. Datagrams can be thought of as discrete blocks of data or messages with limited overhead. UDP does not guarantee that the datagrams will be delivered in any specific order or even at all! So you might be asking, “Why do we use them if they are not guaranteed to arrive at their destination?”. Good question! They are very useful for certain types of data that do not need 100% reliability, and therefore do not need the overhead that TCP imposes.

So what kind of data does not need to be reliably delivered? Say you are running a server that receives weather updates from hundreds of weather stations across the country once every second. That’s a lot of data, but more importantly each new message from a single weather station makes the previous message obsolete. In this case if we lose a few messages it’s not a big deal.

Other kinds of services that are transmitted over UDP are streaming video, voice over IP phone calls, DHCP, and multi-player online games.

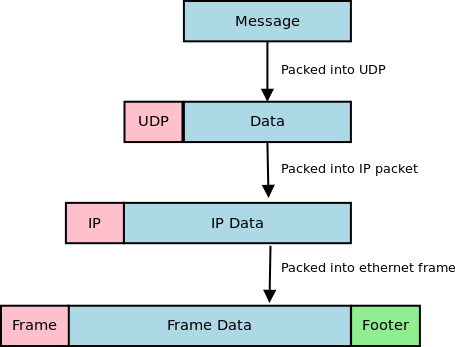

Close look at a UDP Datagram

A user datagram has a fixed 8 byte header. The header is very simple and contains four 16-bit fields. The fields are the source port, destination port, total length, and a checksum. Let’s take a look at it as a C struct.

#include <stdint.h>

typedef struct {

uint16_t sourcePort;

uint16_t destPort;

uint16_t length;

uint16_t checksum;

} UDPHeader_t;

Now you can see how simple the UDP header really is. There is not much to it. Before the datagram is sent out it is first encapsulated into an IP packet. The IP packet has its protocol field set to 17 (0x11) to indicate that it is carrying a UDP datagram. The IP header contains the source and destination addresses. The structure of an IPv4 IP header can be found here at Wikipedia.
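One detail the struct alone doesn't show is that all four fields travel in network byte order (big-endian), so copying raw bytes straight into the struct gives swapped values on a little-endian machine. Here is a small sketch of reading the header from a byte buffer; the names `UdpHeader`, `readBe16`, and `parseUdpHeader` are hypothetical and only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Same four fields as the C struct, stored here in host byte order.
struct UdpHeader {
    std::uint16_t sourcePort;
    std::uint16_t destPort;
    std::uint16_t length;
    std::uint16_t checksum;
};

// Read one big-endian (network order) 16-bit value.
inline std::uint16_t readBe16(const std::uint8_t* p) {
    return static_cast<std::uint16_t>((p[0] << 8) | p[1]);
}

// Decode the fixed 8 byte header. Returns false if the buffer is too short.
inline bool parseUdpHeader(const std::uint8_t* buf, std::size_t len, UdpHeader& out) {
    assert(buf != nullptr);
    if (len < 8) return false;
    out.sourcePort = readBe16(buf);
    out.destPort   = readBe16(buf + 2);
    out.length     = readBe16(buf + 4);  // header plus payload, in bytes
    out.checksum   = readBe16(buf + 6);
    return true;
}
```

For example, the bytes `81 3B 11 5C 00 0D 00 00` decode to source port 33083, destination port 4444, and a total length of 13 bytes (the 8 byte header plus 5 bytes of payload).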

Finally this IP packet will be encapsulated into an Ethernet frame. Since most routing equipment uses a 1500 byte MTU (Maximum transmission unit), a data payload that is sent using UDP should be kept at or below 1432 bytes (IP headers can be up to 60 bytes, and the UDP header is always 8 bytes).
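The arithmetic behind that limit can be written down as a compile-time check. This is just a sketch: the constant and function names are made up for illustration, and the 20 byte figure is the size of an IPv4 header with no options, which is the common case.

```cpp
#include <cstddef>

constexpr std::size_t kEthernetMtu   = 1500;  // typical Ethernet MTU
constexpr std::size_t kUdpHeaderSize = 8;     // fixed UDP header
constexpr std::size_t kMaxIpv4Header = 60;    // IPv4 header with the maximum options
constexpr std::size_t kMinIpv4Header = 20;    // IPv4 header with no options

// Largest UDP payload that fits in one packet without fragmentation.
constexpr std::size_t maxUdpPayload(std::size_t mtu, std::size_t ipHeader) {
    return mtu - ipHeader - kUdpHeaderSize;
}

// Conservative limit: assume the largest possible IP header.
static_assert(maxUdpPayload(kEthernetMtu, kMaxIpv4Header) == 1432, "worst case payload");
// Common case: no IP options.
static_assert(maxUdpPayload(kEthernetMtu, kMinIpv4Header) == 1472, "typical payload");
```

Staying at or below 1432 bytes is the safe choice because it holds no matter how large the IP header turns out to be.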

On a side note, if you're interested in more details about TCP/IP and the protocols that keep networks and the internet running, I recommend you check out TCP/IP Protocol Suite by Behrouz A. Forouzan. It contains a lot of detailed information about a ton of different protocols.

UDP Receiver (client) Example

Alright, now that we know how UDP works, let’s check out how to set up a UDP client to start receiving UDP packets. To get started we are going to use the RawDatagramSocket class in dart:io. One thing that should jump out at you as we go through these examples is that it doesn’t matter whether you are sending or receiving datagrams, you must still bind the socket. This is because datagram sockets are connectionless. Every sender is also a receiver by default and can receive messages. All of the below examples are tested against Dart 1.1.3.

import 'dart:io';

void main(List<String> args){

RawDatagramSocket.bind(InternetAddress.ANY_IP_V4, 4444).then((RawDatagramSocket socket){

print('Datagram socket ready to receive');

print('${socket.address.address}:${socket.port}');

socket.listen((RawSocketEvent e){

Datagram d = socket.receive();

if (d == null) return;

String message = new String.fromCharCodes(d.data).trim();

print('Datagram from ${d.address.address}:${d.port}: ${message}');

});

});

}

We start out by calling the RawDatagramSocket.bind() method to bind the socket to port 4444 on any available IPv4 Ethernet devices. This returns a Future<RawDatagramSocket>. Once the socket has been bound we can listen for any incoming datagram packets by registering an onData callback using the RawDatagramSocket.listen() method. One thing to note is that the onData callback gives us a RawSocketEvent object. To receive the actual datagram we must call RawDatagramSocket.receive() inside of the onData callback. The receive method will return null if there are no datagrams available so make sure you check for that state. The Datagram object returned by the receive() method contains the InternetAddress object with the details of the sender, and the data that was sent as a List<int>.

To test this application fire it up with Dart, and use netcat to send it some test packets.

## in terminal 1

$ dart udp_receive.dart

Datagram socket ready to receive

0.0.0.0:4444

Datagram from 127.0.0.1:42811: Hello!

Datagram from 127.0.0.1:42811: How are you doing?

Datagram from 127.0.0.1:42811: This is receiving UDP packets!

## in terminal 2

$ nc localhost 4444 -u

Hello!

How are you doing?

This is receiving UDP packets!

UDP Sender (server) Example

To create a program that sends datagrams, we basically do the same thing as before. We need to bind() the socket, then call the RawDatagramSocket.send(List<int> buffer, InternetAddress address, int port) method. The send method does not take a Datagram object like you might expect, instead the send() method takes the destination address and port directly. The address and port parameters tell the datagram where to go. Remember that this is a connectionless protocol so each time we want to send data we need to provide a destination.

import 'dart:io';

void main(List<String> args){

RawDatagramSocket.bind(InternetAddress.ANY_IP_V4, 0).then((RawDatagramSocket socket){

print('Sending from ${socket.address.address}:${socket.port}');

int port = 4444;

socket.send('Hello from UDP land!\n'.codeUnits,

InternetAddress.LOOPBACK_IP_V4, port);

});

}

Notice that the bind() method call takes 0 as the port number. This tells Dart that we don’t care what port we use to send the datagram, just pick the next one that is available. Also take note that I am setting the destination to InternetAddress.LOOPBACK_IP_V4. This causes the datagram to be sent to localhost. To test this out we can fire up the udp_receive.dart program from before and then run this program.

## Terminal 1

$ dart udp_receive.dart

Datagram socket ready to receive

0.0.0.0:4444

Datagram from 127.0.0.1:33083: Hello from UDP land!

## Terminal 2

$ dart udp_send.dart

Sending from 0.0.0.0:33083

UDP can send and receive together

Since UDP is a connectionless protocol, a single UDP socket can be used to send and receive data. The bind() call establishes what port and address we can receive data on, and the send() call allows us to send data to anywhere we want. We can easily make a UDP echo server by combining the two.

import 'dart:io';

void main(List<String> args){

RawDatagramSocket.bind(InternetAddress.ANY_IP_V4, 4444).then((RawDatagramSocket socket){

print('UDP Echo ready to receive');

print('${socket.address.address}:${socket.port}');

socket.listen((RawSocketEvent e){

Datagram d = socket.receive();

if (d == null) return;

String message = new String.fromCharCodes(d.data);

print('Datagram from ${d.address.address}:${d.port}: ${message.trim()}');

socket.send(message.codeUnits, d.address, d.port);

});

});

}

In the above example, each time a Datagram is received, it is echoed back to the sender. The Datagram object carries the source InternetAddress, and source port that we can use in the send() method to return the message.

## Terminal 1

$ dart udp_echo.dart

UDP Echo ready to receive

0.0.0.0:4444

Datagram from 127.0.0.1:57194: Now are are getting an echo

Datagram from 127.0.0.1:57194: much more interesting!

## Terminal 2

$ netcat 127.0.0.1 4444 -u

Now are are getting an echo

Now are are getting an echo

much more interesting!

much more interesting!

Multicast

The Dart programs presented above all perform unicasting. Unicasting is a one-to-one transmission of data. There is a single source and a single destination. However, using UDP sockets, we can also take advantage of multicasting. Multicasting lets us have a single source and multiple destinations. This is very convenient for certain applications like streaming media. The source program sends datagram packets to a multicast group address. Each interested client then joins the multicast group and can receive the datagrams being sent.

The source that is sending the multicast datagrams has an easy time. All that is necessary is to send the packets to a multicast group address instead of a normal destination address. Multicast addresses are in the range of 224.0.0.0/4. That is all IP addresses from 224.0.0.0 to 239.255.255.255.
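The /4 in that range means only the top four bits of the address matter, and for multicast they are always 1110. A quick sketch of that check follows; the helper names `ipv4` and `isMulticast` are hypothetical.

```cpp
#include <cstdint>

// Pack the four octets of a.b.c.d into one 32-bit value, a being the top byte.
constexpr std::uint32_t ipv4(std::uint8_t a, std::uint8_t b, std::uint8_t c, std::uint8_t d) {
    return (static_cast<std::uint32_t>(a) << 24) | (static_cast<std::uint32_t>(b) << 16) |
           (static_cast<std::uint32_t>(c) << 8) | static_cast<std::uint32_t>(d);
}

// 224.0.0.0/4: an address is multicast when its top four bits are 1110 (0xE).
constexpr bool isMulticast(std::uint32_t addr) {
    return (addr >> 28) == 0xE;
}

static_assert(isMulticast(ipv4(224, 0, 0, 0)), "first address in the range");
static_assert(isMulticast(ipv4(239, 255, 255, 255)), "last address in the range");
static_assert(!isMulticast(ipv4(192, 168, 1, 1)), "ordinary unicast address");
```

The group address 239.10.10.100 used in the examples here falls inside the range, so `isMulticast(ipv4(239, 10, 10, 100))` is true.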

/*

Multicast UDP broadcaster

multicast_send.dart

*/

import 'dart:io';

import 'dart:async';

import 'dart:math';

void main(List<String> args){

InternetAddress multicastAddress = new InternetAddress('239.10.10.100');

int multicastPort = 4545;

Random rng = new Random();

RawDatagramSocket.bind(InternetAddress.ANY_IP_V4, 0).then((RawDatagramSocket s) {

print("UDP Socket ready to send to group "

"${multicastAddress.address}:${multicastPort}");

new Timer.periodic(new Duration(seconds: 1), (Timer t) {

//Send a random number out every second

String msg = '${rng.nextInt(1000)}';

stdout.write("Sending $msg \r");

s.send('$msg\n'.codeUnits, multicastAddress, multicastPort);

});

});

}

To receive multicast content, extra steps must be taken to join the multicast group that you want to receive packets from. To join a multicast group you must issue an IGMP join command. To do this in Dart, you can use the RawDatagramSocket.joinMulticast(InternetAddress group, {NetworkInterface interface}) method. Another step that must be taken is to add a multicast route to your local routing table. Most people forget this step and can’t figure out why they are not receiving any packets. On Linux (Fedora and Ubuntu) you can add the appropriate route with the command sudo route add -net 224.0.0.0/4 dev eth0. Of course you should replace eth0 with whatever your device is actually called (on my Fedora install my primary NIC device is p34p1).

/*

Multicast UDP client

multicast_receive.dart

*/

import 'dart:io';

void main(List<String> args){

InternetAddress multicastAddress = new InternetAddress("239.10.10.100");

int multicastPort = 4545;

RawDatagramSocket.bind(InternetAddress.ANY_IP_V4, multicastPort)

.then((RawDatagramSocket socket){

print('Datagram socket ready to receive');

print('${socket.address.address}:${socket.port}');

socket.joinMulticast(multicastAddress);

print('Multicast group joined');

socket.listen((RawSocketEvent e){

Datagram d = socket.receive();

if (d == null) return;

String message = new String.fromCharCodes(d.data).trim();

print('Datagram from ${d.address.address}:${d.port}: ${message}');

});

});

}

Troubleshooting multicast

Multicast has a lot of moving parts. If you are trying to run these examples and are unfamiliar with using multicast, it can be frustrating to get things functional. The first thing you should check is that you are transmitting the multicast packets from the network card you intended. If you only have one network card then you can skip this step, but if you have more than one card you can add the route to the routing table so that all traffic is sent from the correct card.

On the receiving side, you will first want to make sure your firewall is not blocking multicast traffic. Fedora Linux ships with a firewall that blocks this traffic, so if you are using Fedora and are familiar with iptables you can either add the correct rule to allow the traffic, or just run sudo iptables -F to flush the firewall rules and let all traffic through.

Next you will want to make sure you have the multicast route correctly set up. On Linux you can use the command route -n to view your routing table. It should look like the one below.

$ route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.1.1 0.0.0.0 UG 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1000 0 0 eth0

192.168.1.0 0.0.0.0 255.255.255.0 U 1 0 0 eth0

224.0.0.0 0.0.0.0 240.0.0.0 U 0 0 0 eth0

If you don’t have a line in your routing table like the last line on the example above you can add it with the command sudo route add -net 224.0.0.0/4 dev eth0. This route is necessary to properly receive multicast traffic through the specified device.

Next you will want to check if the OS has issued the IGMP join for the group address. To do this on Linux you can run cat /proc/net/igmp and you will see output like the one shown below. Make sure you are actively running the multicast_receive.dart program first.

$ cat /proc/net/igmp

Idx Device : Count Querier Group Users Timer Reporter

1 lo : 1 V3

010000E0 1 0:00000000 0

2 eth0 : 2 V3

640A0AEF 1 0:00000000 0

FB0000E0 1 0:00000000 0

010000E0 1 0:00000000 0

In the second column you will see the device names of your network cards. Find the rows under the card you have set your multicast route on. Under it you will see a column called “Group” which contains 8 characters. These characters are hex digits (4 octets). In the first group under eth0 in the above example, taking each pair of hex digits gives 64 0A 0A EF. Converted to decimal, those are 100 10 10 239, which is our multicast group address in reverse order. If you see a line like this then you know the OS has issued the join for the group.
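If you don't feel like converting hex by hand, the decoding is easy to script. The sketch below assumes the reversed byte order shown in the output above (which is what a little-endian machine prints), and the function name `decodeIgmpGroup` is hypothetical.

```cpp
#include <cassert>
#include <string>

// Turn an 8 digit "Group" value from /proc/net/igmp into dotted decimal.
// Assumes the octets are printed in reverse order, as in the listing above.
inline std::string decodeIgmpGroup(const std::string& hex) {
    assert(hex.size() == 8);
    const unsigned long raw = std::stoul(hex, nullptr, 16);
    // The last pair of hex digits is the first octet of the address.
    return std::to_string(raw & 0xFF) + "." +
           std::to_string((raw >> 8) & 0xFF) + "." +
           std::to_string((raw >> 16) & 0xFF) + "." +
           std::to_string((raw >> 24) & 0xFF);
}
```

Feeding it `640A0AEF` gives `239.10.10.100`, and the `010000E0` entry that appears under both devices decodes to `224.0.0.1`, the all-hosts group.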

Finally you will want to make sure that your router can handle multicast traffic and that it is properly configured. More advanced routers have a feature called IGMP snooping, where they can intelligently route multicast packets only to those computers interested in receiving such traffic. Other, simpler routers simply forward the multicast packets to every destination. I am using a Linksys router with dd-wrt firmware installed and have no problem with multicast. I am sure your router can also handle the traffic unless it is very old or misconfigured.

Multicast and the Internet

I should also make you aware that you can’t send multicast traffic over the public internet. All multicast packets are dropped by ISP routers. Supposedly Internet 2 does allow multicasting, but it is still in its very early stages and mostly deployed at universities. So multicast is really only good for broadcasting inside private networks. Keep that in mind before designing any software that relies on a multicast solution.

Wrapping up the Dart socket tour

Well, I hope you have enjoyed this multi-part tour of socket programming with Dart. We have covered all of the sockets that are supported by the language. If you have missed any installments you can check out TCP/IP socket programming here, WebSocket programming here, and Secure socket programming here. In the next installment of the Dart socket series I will cover some miscellaneous functionality that can be used to support sockets, and make more robust socket based programs. If you have any questions or feedback about the code above, feel free to leave a comment.

“WebSocket programming with Dart 1.1”

2014-01-27

WebSockets are the new hotness when programming client side web applications. They eliminate the need for AJAX polling and other annoying workarounds that have been employed to make up for the fact that HTTP is a request-response protocol. WebSockets provide a full-duplex (two way) communications channel over a single TCP connection. They look and feel just like any other TCP socket, but are initiated over a standard HTTP upgrade request. This prevents any issues with firewalls and the like. If you can open a web page, you can use WebSockets (assuming your browser supports it).

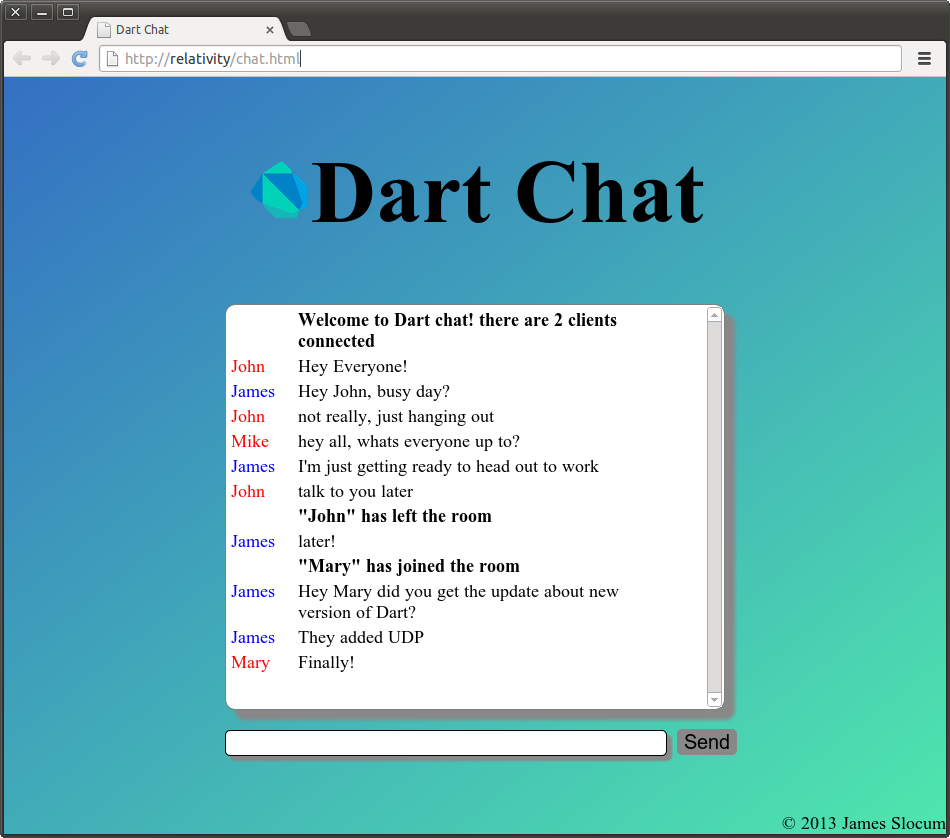

In this installment, I will be covering Dart WebSockets stem to stern. I will show how to set up a WebSocket server and how to connect from both a CLI client and a web browser, then wrap it up with a small demo called Dart Chat. The version of Dart I am using is Dart VM version: 1.1.1 (Wed Jan 15 04:11:49 2014) on “linux_x64”.

What are WebSockets?

As stated previously, WebSockets are a protocol that allows for a full-duplex connection to be made between a client side web application and a web server. HTTP is a simple protocol. The client makes a request for some resource, and the server replies with that resource, or an error. If a user wants to receive “notifications” from a server they could either poll a resource URI (make requests at some set interval) or, in newer applications, take advantage of server sent events. Don’t get me wrong, these methods have their place and do work for a majority of applications, but what do you do if you need two way real-time updating? This is where WebSockets find their niche. Server sent events are great for applications that use a publish-subscribe architecture (like Twitter feeds). WebSockets are great for two way real-time updates where the state of the application can change on both the client and server in a significant way. The most common place to find WebSockets is in multiplayer games.

A Look at an Upgrade Transaction

WebSocket connections are made through a WebSocket handshake request. The request is just a regular HTTP request with an Upgrade header. Along with the upgrade, the client request carries a Sec-WebSocket-Key. This key is a base64 encoded random value. The server uses this value to generate the Sec-WebSocket-Accept response. It takes the key exactly as it was sent (still base64 encoded, not decoded) and appends the magic string 258EAFA5-E914-47DA-95CA-C5AB0DC85B11 to it. The resulting string is hashed with SHA-1, and the digest is encoded into base64. Below is the transaction between the WebSocket echo server and a local client, captured using Wireshark.
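To show the accept calculation end to end without pulling in a crypto library, here is a self-contained sketch with a small SHA-1 and base64 encoder written out by hand. The function names `sha1`, `base64`, and `websocketAccept` are hypothetical, and in real code you would want a vetted library rather than a hand-rolled hash. Dart's own WebSocket classes do this step for you.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

inline std::uint32_t rotl(std::uint32_t x, int n) { return (x << n) | (x >> (32 - n)); }

// Plain SHA-1; returns the 20 byte digest.
inline std::array<std::uint8_t, 20> sha1(const std::string& msg) {
    std::uint32_t h[5] = {0x67452301u, 0xEFCDAB89u, 0x98BADCFEu, 0x10325476u, 0xC3D2E1F0u};
    // Padding: 0x80, zeros up to 56 mod 64, then the bit length as 64-bit big-endian.
    std::vector<std::uint8_t> data(msg.begin(), msg.end());
    const std::uint64_t bitLen = static_cast<std::uint64_t>(msg.size()) * 8;
    data.push_back(0x80);
    while (data.size() % 64 != 56) data.push_back(0);
    for (int i = 7; i >= 0; --i) data.push_back(static_cast<std::uint8_t>(bitLen >> (8 * i)));

    for (std::size_t off = 0; off < data.size(); off += 64) {
        std::uint32_t w[80];
        for (int i = 0; i < 16; ++i) {
            const std::uint8_t* p = &data[off + 4 * i];
            w[i] = (std::uint32_t(p[0]) << 24) | (std::uint32_t(p[1]) << 16) |
                   (std::uint32_t(p[2]) << 8) | std::uint32_t(p[3]);
        }
        for (int i = 16; i < 80; ++i) w[i] = rotl(w[i - 3] ^ w[i - 8] ^ w[i - 14] ^ w[i - 16], 1);
        std::uint32_t a = h[0], b = h[1], c = h[2], d = h[3], e = h[4];
        for (int i = 0; i < 80; ++i) {
            std::uint32_t f, k;
            if (i < 20)      { f = (b & c) | (~b & d);          k = 0x5A827999u; }
            else if (i < 40) { f = b ^ c ^ d;                   k = 0x6ED9EBA1u; }
            else if (i < 60) { f = (b & c) | (b & d) | (c & d); k = 0x8F1BBCDCu; }
            else             { f = b ^ c ^ d;                   k = 0xCA62C1D6u; }
            const std::uint32_t t = rotl(a, 5) + f + e + k + w[i];
            e = d; d = c; c = rotl(b, 30); b = a; a = t;
        }
        h[0] += a; h[1] += b; h[2] += c; h[3] += d; h[4] += e;
    }
    std::array<std::uint8_t, 20> out{};
    for (int i = 0; i < 20; ++i) out[i] = static_cast<std::uint8_t>(h[i / 4] >> (24 - 8 * (i % 4)));
    return out;
}

// Standard base64 with '=' padding.
inline std::string base64(const std::uint8_t* p, std::size_t n) {
    assert(p != nullptr || n == 0);
    static const char tbl[] = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    std::string out;
    for (std::size_t i = 0; i < n; i += 3) {
        std::uint32_t v = std::uint32_t(p[i]) << 16;
        if (i + 1 < n) v |= std::uint32_t(p[i + 1]) << 8;
        if (i + 2 < n) v |= std::uint32_t(p[i + 2]);
        out += tbl[(v >> 18) & 63];
        out += tbl[(v >> 12) & 63];
        out += (i + 1 < n) ? tbl[(v >> 6) & 63] : '=';
        out += (i + 2 < n) ? tbl[v & 63] : '=';
    }
    return out;
}

// Sec-WebSocket-Accept = base64(SHA-1(key as sent + magic string)).
inline std::string websocketAccept(const std::string& key) {
    const auto digest = sha1(key + "258EAFA5-E914-47DA-95CA-C5AB0DC85B11");
    return base64(digest.data(), digest.size());
}
```

As a sanity check, the sample key from the WebSocket specification, `dGhlIHNhbXBsZSBub25jZQ==`, should produce the accept value `s3pPLMBiTxaQ9kYGzzhZRbK+xOo=`.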

# Request from client to server

GET / HTTP/1.1

Request Method: GET

Request URI: /

Request Version: HTTP/1.1

Upgrade: websocket

Connection: Upgrade

Host: relativity:8080

Origin: null

Pragma: no-cache

Cache-Control: no-cache

Sec-WebSocket-Key: 2YeHa81dWUbaxoD6kxPsmg==

Sec-WebSocket-Version: 13

Sec-WebSocket-Extensions: x-webkit-deflate-frame

User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.39 (Dart) Safari/537.36

# response from server

HTTP/1.1 101 Switching Protocols

Request Version: HTTP/1.1

Status Code: 101

Response Phrase: Switching Protocols

server: DartEcho (1.0) by James Slocum

connection: Upgrade

upgrade: websocket

sec-websocket-accept: 6C4pHheu6O23Vk894/B30FrzYzo=

content-length: 0
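To make the accept-key calculation concrete, here is a minimal sketch of it. It is written for a current Dart SDK (2.12 or later), not the 1.1.1 VM used in this post, and it spells out SHA-1 by hand only so that it runs without any packages; the names webSocketAccept() and sha1Digest() are my own and not part of dart:io. RFC 6455 gives s3pPLMBiTxaQ9kYGzzhZRbK+xOo= as the accept value for its sample key dGhlIHNhbXBsZSBub25jZQ==, which makes a handy check.

```dart
import 'dart:convert';

/// GUID fixed by RFC 6455 for the opening handshake.
const String webSocketGuid = '258EAFA5-E914-47DA-95CA-C5AB0DC85B11';

/// Rotates a 32-bit value left by [n] bits.
int _rotl(int x, int n) => ((x << n) | (x >> (32 - n))) & 0xFFFFFFFF;

/// Plain SHA-1 over [message], written out so the sketch needs no packages.
List<int> sha1Digest(List<int> message) {
  // Padding: 0x80, zeros up to 56 mod 64, then the bit length (big-endian).
  final padded = <int>[...message, 0x80];
  while (padded.length % 64 != 56) {
    padded.add(0);
  }
  final bitLength = message.length * 8;
  for (var shift = 56; shift >= 0; shift -= 8) {
    padded.add((bitLength >> shift) & 0xFF);
  }

  final h = <int>[0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476, 0xC3D2E1F0];
  for (var offset = 0; offset < padded.length; offset += 64) {
    // Message schedule: 16 words from the block, expanded to 80.
    final w = List<int>.filled(80, 0);
    for (var i = 0; i < 16; i++) {
      final j = offset + i * 4;
      w[i] = (padded[j] << 24) |
          (padded[j + 1] << 16) |
          (padded[j + 2] << 8) |
          padded[j + 3];
    }
    for (var i = 16; i < 80; i++) {
      w[i] = _rotl(w[i - 3] ^ w[i - 8] ^ w[i - 14] ^ w[i - 16], 1);
    }

    var a = h[0], b = h[1], c = h[2], d = h[3], e = h[4];
    for (var i = 0; i < 80; i++) {
      int f, k;
      if (i < 20) {
        f = (b & c) | (~b & d);
        k = 0x5A827999;
      } else if (i < 40) {
        f = b ^ c ^ d;
        k = 0x6ED9EBA1;
      } else if (i < 60) {
        f = (b & c) | (b & d) | (c & d);
        k = 0x8F1BBCDC;
      } else {
        f = b ^ c ^ d;
        k = 0xCA62C1D6;
      }
      final temp = (_rotl(a, 5) + f + e + k + w[i]) & 0xFFFFFFFF;
      e = d;
      d = c;
      c = _rotl(b, 30);
      b = a;
      a = temp;
    }
    h[0] = (h[0] + a) & 0xFFFFFFFF;
    h[1] = (h[1] + b) & 0xFFFFFFFF;
    h[2] = (h[2] + c) & 0xFFFFFFFF;
    h[3] = (h[3] + d) & 0xFFFFFFFF;
    h[4] = (h[4] + e) & 0xFFFFFFFF;
  }

  // Serialise the five 32-bit words as 20 big-endian bytes.
  return [
    for (final word in h)
      for (var shift = 24; shift >= 0; shift -= 8) (word >> shift) & 0xFF
  ];
}

/// Builds the Sec-WebSocket-Accept value for a Sec-WebSocket-Key header.
String webSocketAccept(String secWebSocketKey) {
  // The key is used as sent (base64 text); it is not decoded first.
  final digest = sha1Digest(ascii.encode(secWebSocketKey + webSocketGuid));
  return base64.encode(digest);
}

void main() {
  // Sample key from RFC 6455.
  print(webSocketAccept('dGhlIHNhbXBsZSBub25jZQ=='));
  // Key from the Wireshark capture above.
  print(webSocketAccept('2YeHa81dWUbaxoD6kxPsmg=='));
}
```

In real code you would not hand-roll the hash. WebSocketTransformer.upgrade() performs this whole step for you, so the sketch is only meant to show what happens on the wire.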

WebSocket Server

Enough theory, let’s get to some practice! In Dart, setting up a WebSocket server starts by setting up a regular web server. Since the WebSocket connection comes in as an HTTP upgrade request, we need to check the request header for the value of the upgrade flag. The WebSocketTransformer class provides a helper method called isUpgradeRequest(HttpRequest request) to check this for you. Once you know you have an upgrade, you can send the request to the WebSocketTransformer.upgrade(HttpRequest request) method to convert it into a WebSocket object.

import 'dart:io';

import 'dart:convert';

void main() {

HttpServer.bind(InternetAddress.ANY_IP_V4, 8080).then((HttpServer server) {

print("HttpServer listening...");

server.serverHeader = "DartEcho (1.0) by James Slocum";

server.listen((HttpRequest request) {

if (WebSocketTransformer.isUpgradeRequest(request)){

WebSocketTransformer.upgrade(request).then(handleWebSocket);

}

else {

print("Regular ${request.method} request for: ${request.uri.path}");

serveRequest(request);

}

});

});

}

void handleWebSocket(WebSocket socket){

print('Client connected!');

socket.listen((String s) {

print('Client sent: $s');

socket.add('echo: $s');

},

onDone: () {

print('Client disconnected');

});

}

void serveRequest(HttpRequest request){

request.response.statusCode = HttpStatus.FORBIDDEN;

request.response.reasonPhrase = "WebSocket connections only";

request.response.close();

}

In this code from sampleserver.dart we can see that a regular HTTP server is started in main(). The server listens for incoming requests and if it gets one, it looks to see if it is an upgrade. If this is the case it is passed to the WebSocketTransformer.upgrade() method. This method returns a Future<WebSocket> object that can be waited on. When it is ready we send it to the handleWebSocket(WebSocket socket) function to have the onData() and onDone() callbacks registered. When a message is sent as text, the onData() callback will receive a String, but when it is sent as binary (like an image file) it will receive a List<int>.

If the request is not a WebSocket request, then we simply reject it with a forbidden (403) return code. Of course you are welcome to expand on this part of the application and serve anything you want. I just felt it was simpler to isolate the WebSocket code for this example.
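The echo server's onData() callback is typed to take a String, so it only covers text messages. If you expect binary messages as well, one option is to branch on the runtime type. The following is a rough sketch for a current Dart SDK (2.12 or later); describeMessage() is a helper name I made up for illustration, and handleWebSocket() is just a variant of the function above.

```dart
import 'dart:io';

/// Summarises one incoming WebSocket message based on its runtime type.
String describeMessage(Object? data) {
  if (data is String) return 'text: $data';
  if (data is List<int>) return 'binary: ${data.length} bytes';
  return 'unexpected: ${data.runtimeType}';
}

/// Variant of handleWebSocket() that accepts both text and binary messages.
void handleWebSocket(WebSocket socket) {
  socket.listen(
    (dynamic data) => socket.add('echo: ${describeMessage(data)}'),
    onDone: () => print('Client disconnected'),
  );
}

void main() {
  // Exercise the helper directly, without opening a socket.
  print(describeMessage('Hello Dart!'));
  print(describeMessage(<int>[0x89, 0x50, 0x4E, 0x47]));
}
```

Run on its own, main() prints "text: Hello Dart!" followed by "binary: 4 bytes".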

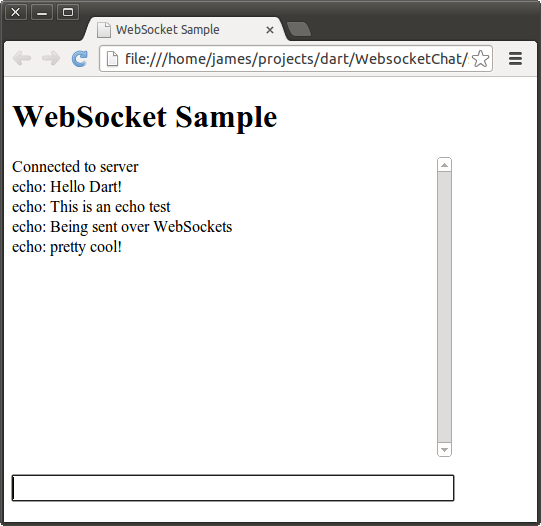

Browser WebSocket Client

The first WebSocket client that I am going to show is a browser client using the dart:html library. This will require the use of some HTML and CSS. To get started I will write up the HTML page called sampleclient.html that will hold the input TextInputElement and the output ParagraphElement. The HTML and CSS are pretty straightforward so you should not have too much of an issue following them.

<!DOCTYPE html>

<html>

<head>

<title>WebSocket Sample</title>

<link rel="stylesheet" href="sampleclient.css"></link>

</head>

<body>

<h1>WebSocket Sample</h1>

<p id="output">

</p>

<input id="input" type="text"></input>

<script type="application/dart" src="sampleclient.dart"></script>

<script src="packages/browser/dart.js"></script>

</body>

</html>

Next is the sampleclient.css style sheet to apply a very basic style to the page.

#input {

width: 440px;

font-size: 18px;

border-style: solid;

border-color: black;

border-width: 1px;

}

#output {

width: 440px;

height: 300px;

font-size: 16px;

overflow-y: scroll;

}

Finally, let’s take a look at the Dart source in sampleclient.dart.

import 'dart:html';

void main() {

TextInputElement input = querySelector('#input');

ParagraphElement output = querySelector('#output');

String server = 'ws://localhost:8080/';

WebSocket ws = new WebSocket(server);

ws.onOpen.listen((Event e) {

outputMessage(output, 'Connected to server');

});

ws.onMessage.listen((MessageEvent e){

outputMessage(output, e.data);

});

ws.onClose.listen((Event e) {

outputMessage(output, 'Connection to server lost...');

});

input.onChange.listen((Event e){

ws.send(input.value.trim());

input.value = "";

});

}

void outputMessage(Element e, String message){

print(message);

e.appendText(message);

e.appendHtml('<br/>');

//Make sure we 'autoscroll' the new messages

e.scrollTop = e.scrollHeight;

}

To open a WebSocket connection to the server, I simply call the WebSocket constructor with the URI of the server. The dart:html WebSocket class provides several specific events that can be listened for. The onOpen event fires when the WebSocket connection has been established. The onMessage event fires every time data is available to read from the socket. The onClose event fires when the server has shut down and the socket is no longer available. I hook each of these events up to the outputMessage() function that will output what the server has sent to a ParagraphElement object.

To test this application out, simply run the sampleserver.dart program, and then open sampleclient.html in Dartium. Don’t forget to run pub install first to get the bootstrap if you are using my example code.

$ dart sampleserver.dart

HttpServer listening...

Client connected!

Client sent: Hello Dart!

Client sent: This is an echo test

Client sent: Being sent over WebSockets

Client sent: pretty cool!

Client disconnected

A Command Line WebSocket Client

WebSockets are not limited to the client side! It’s quite easy to open a WebSocket connection from a command line application using the dart:io WebSocket class. The WebSocket in dart:io works a bit differently than the one in dart:html. Let’s take a look at the command line client code, and then I will go over some differences.

import 'dart:io';

WebSocket ws;

void main(List<String> args){

if (args.length < 1){

print('Please specify a server URI. ex ws://example.org');

exit(1);

}

String server = args[0];

//Open the websocket and attach the callbacks

WebSocket.connect(server).then((WebSocket socket) {

ws = socket;

ws.listen(onMessage, onDone: connectionClosed);

});

//Attach to stdin to read from the keyboard

stdin.listen(onInput);

}

void onMessage(String message){

print(message);

}

void connectionClosed() {

print('Connection to server closed');

}

void onInput(List<int> input){

String message = new String.fromCharCodes(input).trim();

//Exit gracefully if the user types 'quit'

if (message == 'quit'){

ws.close();

exit(0);

}

ws.add(message);

}

The first major difference that should pop out at you is that the dart:io WebSocket is created and connected using the WebSocket.connect() method that returns a Future<WebSocket>. The dart:html WebSocket is created using its constructor. The HTML version of the WebSocket also exposes several separate event hooks for receiving messages, knowing when a connection is made, and knowing when a connection is closed. The io version provides the “classic” listen(void onData(T event), {Function onError, void onDone(), bool cancelOnError}) interface for registering callbacks. The HTML version also has a send() method to transmit data, while the io version has add(). There is currently an open ticket for unifying these WebSocket interfaces.

$ dart sampleserver.dart

HttpServer listening...

Client connected!

Client sent: Hello from the command line

Client sent: Now I can create one type of socket, and use it for applications and webapps

Client sent: hurray!

Client disconnected

$ dart samplecliclient.dart ws://localhost:8080

Hello from the command line

echo: Hello from the command line

Now I can create one type of socket, and use it for applications and webapps

echo: Now I can create one type of socket, and use it for applications and webapps

hurray!

echo: hurray!

quit
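The “classic” listen() interface is the same one every Dart Stream exposes, so you can try it out without a socket at all. Here is a small sketch, assuming a current Dart SDK (2.12 or later); collectEvents() is a name I picked for the example, and a StreamController stands in for the WebSocket.

```dart
import 'dart:async';

/// Listens to [messages] the same way the CLI client listens to its
/// WebSocket, and records what each callback saw.
Future<List<String>> collectEvents(Stream<String> messages) {
  final log = <String>[];
  final done = Completer<List<String>>();
  messages.listen(
    (message) => log.add('data: $message'),
    onError: (Object error) => log.add('error: $error'),
    onDone: () => done.complete(log),
    cancelOnError: false, // keep listening after an error event
  );
  return done.future;
}

Future<void> main() async {
  final controller = StreamController<String>();
  final result = collectEvents(controller.stream);

  controller.add('hello');
  controller.addError('boom');
  controller.add('still listening');
  await controller.close();

  (await result).forEach(print);
}
```

Because cancelOnError is false, the third event still arrives after the error. The log ends up as data: hello, error: boom, data: still listening.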

Project Files and Further Reading

The full source for these sample files can be found here. Also included is a WebSocket based chat application called DartChat. To run the applications, un-tar the archive and run the command pub install. This will install all of the application dependencies and the bootstrap script. From there feel free to use sampleserver.dart to run the simple echo server, or run server.dart to run the full chat server. To run the chat client, open chat.html in Dartium. If you have any questions about the functionality of the chat system feel free to leave a comment below!

$ tar -zxvf dart_websockets.tar.gz

$ pub install

$ dart server.dart &

$ dartium chat.html

In the next installment, I will be covering the freshly released RawDatagramSocket class that will handle UDP communications in Dart.

“Secure Sockets and Servers with Dart 1.0”

2013-12-14

My last post on Socket Programming with Dart was a huge hit and I got some requests to follow it up with a websockets version. I am more than happy to oblige, but before I can dive into websockets and the Dart HTTP API stack, I want to first show how to set up and use secure sockets. Why cover secure sockets? Well if we are going to write a web facing chat server I think those messages should be encrypted.

Dart secure sockets and secure servers use SSL/TLS security. More specifically they rely on X.509 certificates to validate servers and (optionally) clients. The server will hand out a certificate that will verify itself to the client. If the client accepts the certificate it will exchange symmetric session keys used to encrypt the communications between them. In a production environment the X.509 certificate is signed and verified by a 3rd party called a certificate authority. Web browsers usually have a list of trusted authorities that they will accept signatures from.

In our development environment, we are going to create self-signed certificates for our secure servers. Does self-signed mean insecure? No, the data will still be encrypted as one would expect, but our clients will get a warning that we are not recognized as a valid signing authority. In fact it’s funny looking around the web at how many large web companies are trusted as valid authorities, and self-sign their certificates. Google is a good example of this: check out https://google.com and you will see the certificate is verified by Google Inc.

The Dart implementation of SSL/TLS security uses a Network Security Services (NSS) database to store the server private key and certificate in. Before we begin programming and using secure sockets with Dart we must first set up the database.

Setting up an NSS Key Database

Network Security Services or NSS is a set of libraries and tools to create secure server and client applications. It is provided by the Mozilla Foundation and is used in many of their products. To start setting up the database, you must first install the NSS tools. On Ubuntu it’s simply sudo apt-get install libnss3-tools. On Fedora 17 you can use sudo yum install nss-tools. On OSX you can use brew install nss. For more specific installation instructions you can check out The Mozilla wiki (look at part 3).

Once you have the tools installed, you can use the command line application called certutil to create a new key database, and create a self signed development certificate. To create the database, use the commands

$ echo "[my secret password]" > pwdfile

$ certutil -N -d 'sql:./' -f pwdfile

$ ls

pwdfile cert9.db key4.db pkcs11.txt

Let’s break down this command. The -N flag says to create a new database. The -d 'sql:./' flag says to create a new cert9.db and key4.db file in the current directory. The “sql:” prefix is necessary to indicate that you want the newer format. If you forget the “sql:” prefix then you will get the old cert8.db and key3.db files, which are not compatible with Dart! The -f pwdfile flag says to use the file ‘pwdfile’ as a password file. Before you create the database, you should echo a password into a password file to use for the rest of the commands.

Okay, so now we have an empty key database. Let’s create a self-signed certificate so we can start developing our application. In a production environment we would create a certificate request, then send the request to some certificate authority to have a certificate issued. Once the certificate is issued we would load it into the NSS database. However that is more work (and cost) than is needed for a simple development project. Creating a self-signed certificate will still let us experiment, but will throw a warning to our connecting clients (like web browsers).

$ certutil -S -s "cn=dartcert" -n "self signed for dart" -x -t "C,C,C" -m 1000 -v 120 -d "sql:./" -k rsa -g 2048 -f pwdfile

A random seed must be generated that will be used in the creation of your key. One of the easiest ways to create a random seed is to use the timing of keystrokes on a keyboard.

To begin, type keys on the keyboard until this progress meter is full. DO NOT USE THE AUTOREPEAT FUNCTION ON YOUR KEYBOARD!

Continue typing until the progress meter is full:

[********** ]

There are a ton of flags in that last command, so let’s break them down. The -S flag makes a certificate and adds it to the database. The -s "cn=dartcert" flag specifies the subject line, and sets the common name to “dartcert”. -n "self signed for dart" sets the nickname of the certificate. -x tells it to self sign, and -t "C,C,C" sets the certificate’s trust attributes. In our case we are setting all three attributes to “trusted CA to issue server certs.” The -m 1000 flag sets the certificate’s serial number. The -v 120 flag makes the certificate valid for 120 months. Finally the -k rsa -g 2048 flags tell certutil to use an RSA algorithm with a 2048 bit key. Feel free to use a stronger key, up to 8192 bits.

After you run the command, you will be prompted to mash the keyboard to generate some entropy to seed the algorithm. Now your certificate is ready for use. You can view the certificates in the database with the command certutil -L -d 'sql:./'. Keep in mind that this is the bare minimum you need to create a self signed certificate. For more options you can use the command certutil -H, or check out some examples at the Mozilla Foundation NSS page.

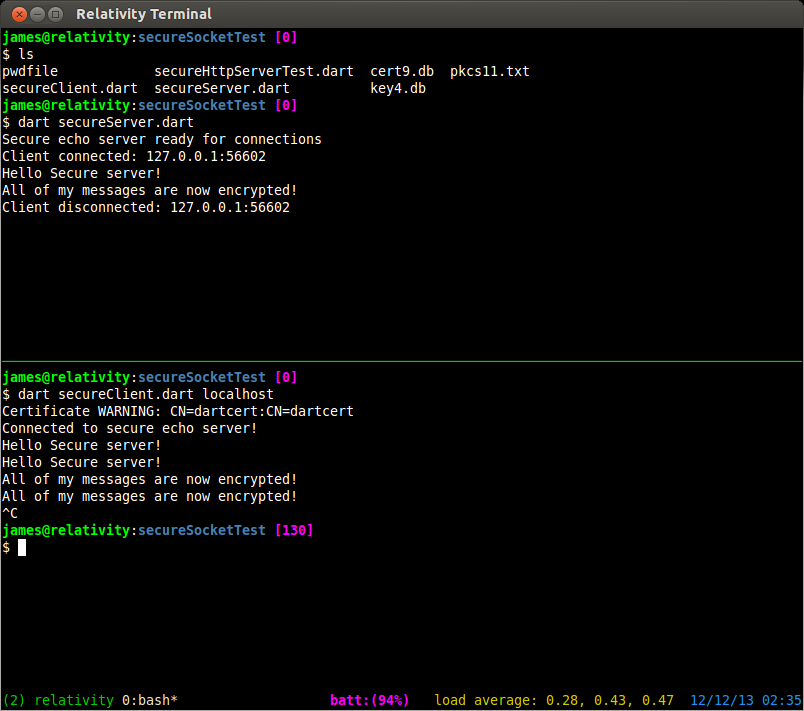

Creating a Secure Server

Alright! Now that we have our database set up and a certificate that we can use, let’s get to some coding. To get started we are going to work with the SecureServerSocket and SecureSocket classes.

import 'dart:io';

void main() {

String password = new File('pwdfile').readAsStringSync().trim();

SecureSocket.initialize(database: "./",

password: password);

SecureServerSocket.bind(InternetAddress.ANY_IP_V4, 4777, "CN=dartcert")

.then((SecureServerSocket ss) {

print("Secure echo server ready for connections");

ss.listen((SecureSocket sClient) {

print("Client connected: "

"${sClient.remoteAddress.address}:${sClient.remotePort}");

sClient.write("Connected to secure echo server!");

sClient.listen((List data) {

String message = new String.fromCharCodes(data).trim();

print(message);

sClient.write(message);

},

onDone: () {

print("Client disconnected: "

"${sClient.remoteAddress.address}:${sClient.remotePort}");

sClient.destroy();

});

},

onError : (error){

print(error);

});

});

}

The first thing that needed to be done was get connected to the NSS database. Since I have stored the password to the database in a file, I will simply read the password from that file in my server program. To do that I use File.readAsStringSync(). The “Sync” tells Dart to make a blocking read on that file, and prevent the rest of the program from running until it’s complete. To open up the database I use SecureSocket.initialize() to tell Dart where to find it, and the password to use to get in. To create a secure server socket I use SecureServerSocket.bind() and pass it three parameters. The first is what interface to listen on. Just like last time I want to listen on all of them. The second is what port to bind to. Any port above 1024 is okay to use (as long as some other program hasn’t bound to it first). The third parameter tells Dart what certificate to use to establish new secure connections. The name I use is exactly the same as the name I provided to certutil when I created the certificate.

From this point forward, everything should look familiar. The code is exactly the same as the regular socket code. You listen() for new connections, and handle them accordingly.

Connecting to a Secure Server

So now we have our secure server, how do we securely connect a new client? We can’t just use telnet anymore because of the TLS handshake that establishes the secure connection. We need to write our own client for this server.

import 'dart:io';

void main(List<String> args) {

if (args.length < 1){

print("Please specify a hostname or IP");

return;

}

String host = args[0];

SecureSocket socket;

SecureSocket.connect(host, 4777, onBadCertificate: (X509Certificate c) {

print("Certificate WARNING: ${c.issuer}:${c.subject}");

return true;

}).then((SecureSocket ss) {

socket = ss;

socket.listen((List data) {

String message = new String.fromCharCodes(data).trim();

print(message);

},

onDone: () {

print("Lost connection to server");

socket.destroy();

});

});

stdin.listen((List data) {

String input = new String.fromCharCodes(data).trim();

socket.write(input);

});

}

This should also look pretty familiar for the most part. The only real difference between this program, and one that uses regular sockets is the onBadCertificate callback. To connect to the server we simply use SecureSocket.connect() and pass it a host or IP address, and a port number. The onBadCertificate callback must return a bool that indicates whether to accept or reject a “bad” certificate. Our self signed certificate will trigger this callback, so we need to return true in order to use this client with our current certificate. If you happen to have a valid certificate issued from a trusted certificate authority you should not have this issue.

Creating a secure HTTP server

Creating a secure HTTP server is as easy as creating a secure socket. The only difference is that we use the HttpServer.bindSecure() method. We still specify what devices to listen on, what port to use, and the name of our certificate. We also still need to use the SecureSocket.initialize() method to tell our program where to find our certificate database.

import 'dart:io';

void main(){

String password = new File('pwdfile').readAsStringSync().trim();

SecureSocket.initialize(database: "./",

password: password);

HttpServer.bindSecure(InternetAddress.ANY_IP_V4, 4443,

certificateName: "CN=dartcert")

.then((HttpServer server) {

server.listen((HttpRequest request) {

print('Request for ${request.uri.path}');

request.response.write(

"<html><body><h1>"

"Hello [REDACTED]!"

"</h1></body></html>");

request.response.close();

});

});

}

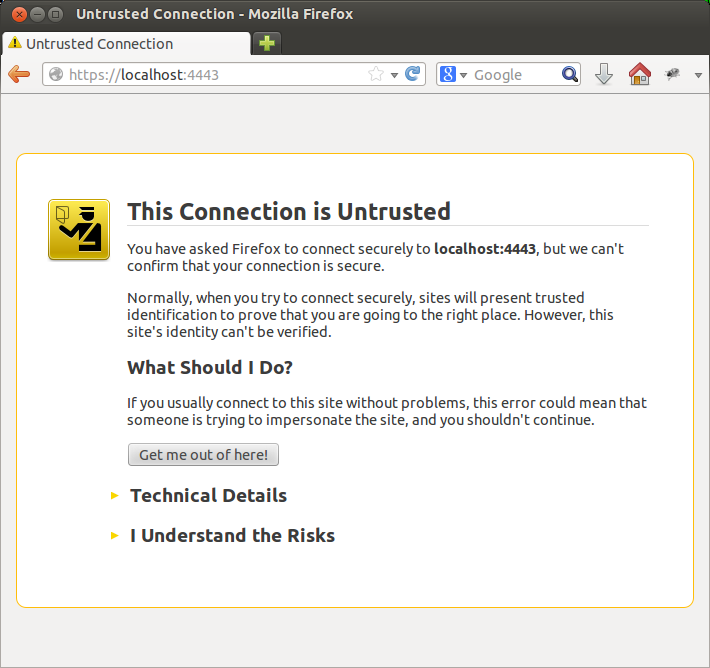

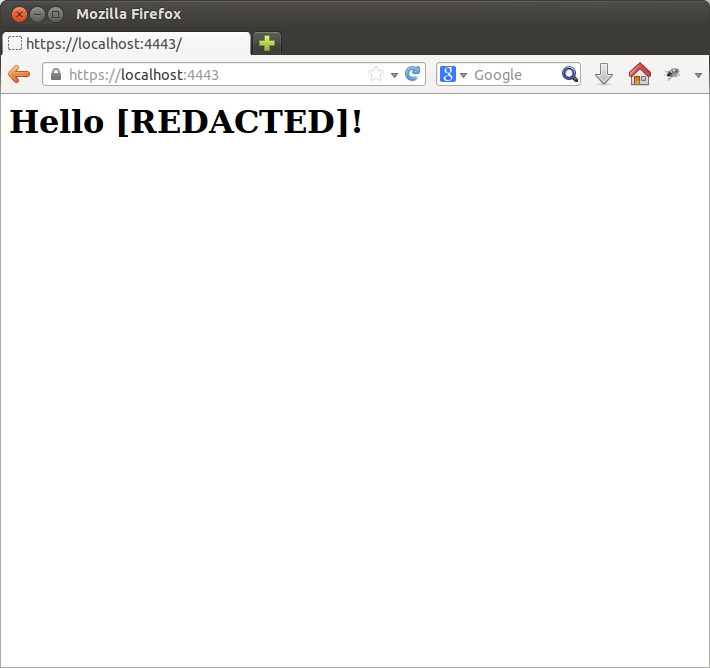

Now you can fire up an instance with dart secureHttpServer.dart and point your browser to https://localhost:4443. You can add an exception for your self-signed certificate. Normally I would NEVER advocate accepting a certificate exception, but since it’s ours, and it’s localhost, there’s no risk.

$ dart secureHttpServer.dart

Request for /

Request for /favicon.ico

Request for /favicon.ico

Now that we have gotten that out of the way, next time I will present another chat server and client implemented with Dart websockets. I must admit I was pleasantly surprised by how natural and effortless it is to program in Dart on the client side! Stay tuned for the next part in the series.

“Network Socket Programming with Dart 1.0”

2013-11-20

It’s hard to believe that I started writing about Google Dart a year ago this November, and almost like clockwork Dart SDK 1.0 was released on November 14th. With the release of version 1.0 comes a full web development environment that rivals what is currently available with JavaScript. While the client side web stuff is super cool and powerful, I love that it has server side utilities as well. It’s kinda like using JavaScript in the browser and Node.js on the server. Dart is a complete package!

To kick off the release of version 1.0 I’m going to run a series on Dart. I am not sure where it will go, but my goal is to cover classes and topics not really covered by other sites. For this installment I am going to cover socket programming in Dart on the server side.

To prevent another fiasco I will disclose that I am using the Dart VM 1.0.0.3_r30188 on 64 bit Ubuntu Linux. All of the below code was written for and tested against that version. If you have any issues check your version first, then leave me a comment below. Let’s dive in!

What is a network socket?

In the broad sense, a socket is an endpoint to an interprocess communications connection across a network. They are usually implemented in the transport layer of the OSI model. For this exercise you can simply think of them as an interface to get your program sending and receiving data across a network, or over the Internet. Dart socket objects are implemented on TCP/IP. Dart does not support UDP at the moment. (Edit: UDP is now supported and covered on this page.) Other types of sockets exist, such as Unix domain sockets and websockets, but those won’t be covered here.

In this article we are concerned with two classes from the dart:io API. The first is Socket, which we can use to establish a connection to a server as a client. The second is ServerSocket, which we will use to create a server and accept client connections.

Client connections

The Socket class has a static method called connect(host, int port). The host parameter can be either a String with a host name or IP address, or an InternetAddress object. connect() will return a Future<Socket> object that will make the connection asynchronously. To know when a connection is actually made, we will register a Future.then(void onValue(T value)) callback. Let’s take a look.

/*

file: socketexample.dart

Author: James Slocum

*/

import 'dart:io';

void main() {

Socket.connect("google.com", 80).then((socket) {

print('Connected to: '

'${socket.remoteAddress.address}:${socket.remotePort}');

socket.destroy();

});

}

In the above example we opened a connection to google.com on port 80. Port 80 is the port that serves web pages. After the socket is connected to the server, the IP and port that it is connected to are printed to the screen and the socket is shut down. By shutting down the socket using Socket.destroy() we are telling Dart that we don’t want to send or receive any more data on that connection. When you run this program (assuming you have a connection to the internet and DNS is working properly) you should see output similar to the one below.

$ dart socketexample.dart

Connected to: 173.194.43.36:80

You see, nothing to it! Dart does all of the heavy lifting of looking up the IP for google.com, and establishing the connection over TCP. All your code has to do is sit back and wait. Let’s take it a step further and request the index page from Google after we have connected. In order to accomplish this we must do two things. First we have to send a request for the page, and second we must have a way of receiving the response. In order to send data over a socket we have to use the Socket.write(String data) method. To receive data we have to register an onData() callback using the Socket.listen(void onData(data)) method. For this example we will also register an optional onDone() callback to let us know when the server has closed the connection.

/*

file: getindexexample.dart

author: James Slocum

*/

import 'dart:io';

void main() {

String indexRequest = 'GET / HTTP/1.1\nConnection: close\n\n';

//connect to google port 80

Socket.connect("google.com", 80).then((socket) {

print('Connected to: '

'${socket.remoteAddress.address}:${socket.remotePort}');

//Establish the onData, and onDone callbacks

socket.listen((data) {

print(new String.fromCharCodes(data).trim());

},

onDone: () {

print("Done");

socket.destroy();

});

//Send the request

socket.write(indexRequest);

});

}

Note: This program is just to illustrate the use of Sockets and how they work. I would not advocate connecting to an HTTP server using raw TCP sockets. Dart offers an HttpClient class that provides much more functionality.

When you run the above code, you should see the HTTP/1.1 response headers followed by the contents of the index page. This little application can be a great tool for learning about web protocols. You can also see exactly what cookies are being set, and their values.

$ dart getindexexample.dart

Connected to: 173.194.43.38:80

HTTP/1.1 200 OK

Date: Tue, 19 Nov 2013 02:05:33 GMT

Expires: -1

Cache-Control: private, max-age=0

Content-Type: text/html; charset=ISO-8859-1

...

... (headers and HTML code)

...

</script></body></html>

Done
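One caveat about the request string in getindexexample.dart: HTTP/1.1 expects every header line to end in CRLF (\r\n) and requires a Host header. Google's servers accepted the shortened form here, but a stricter server may not. The sketch below builds a well-formed request; buildGetRequest() is a helper name I made up, and the code assumes a current Dart SDK (2.12 or later).

```dart
/// Builds a minimal HTTP/1.1 GET request with CRLF line endings
/// and the mandatory Host header.
String buildGetRequest(String host, {String path = '/'}) {
  return 'GET $path HTTP/1.1\r\n'
      'Host: $host\r\n'
      'Connection: close\r\n'
      '\r\n'; // an empty line ends the header section
}

void main() {
  // The result can be passed straight to socket.write().
  print(buildGetRequest('google.com'));
}
```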

Server Sockets

As you can see, making remote connections to a server is easy when you use the Dart Socket object. So what do you do if you want remote clients to connect to you? For that we can use the ServerSocket object. In order to create a server that can handle client connections, we must first bind to a specific TCP port that we will listen on. To do this we can use the static ServerSocket.bind(address, int port) method. This will return a Future<ServerSocket>. Once again we will use the Future.then(void onValue(T value)) method to register our callback so we know when the socket has been bound to the port. Make sure to choose a port higher than 1024. Ports lower than that are in the reserved range and may require root or administrator permissions to bind. In my examples I use port 4567.

/**

file: serverexample.dart

author: James Slocum

Simple server that will

1) accept a new connection

2) say hello

3) close the connection

*/

import 'dart:io';

void main() {

ServerSocket.bind(InternetAddress.ANY_IP_V4, 4567).then(

(ServerSocket server) {

server.listen(handleClient);

}

);

}

void handleClient(Socket client){

print('Connection from '

'${client.remoteAddress.address}:${client.remotePort}');

client.write("Hello from simple server!\n");

client.close();

}

You should notice a big difference from last time. Instead of listening for binary data from our connection, we are listening for client connections. When we get a connection, it calls our handleClient(Socket client) function with a Socket object that represents the client connection. Going forward we will use this client socket object to send data to, and receive data from, this specific client. To run this example we need to use two terminals. In the first one you can run the server, and in the second you can use telnet as the client application.

#First terminal

$ dart serverexample.dart

Connection from 127.0.0.1:47347 #After we run telnet in another terminal

#Second terminal

$ telnet localhost 4567

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

Hello from simple server!

Connection closed by foreign host.

I also want to point out the use of InternetAddress.ANY_IP_V4 which tells the ServerSocket that it’s free to accept connections on any device that supports IPv4. In my case that is my ethernet card, my wireless card, and my loopback device (localhost). If you want to bind to a specific ethernet device, you can use a string with the IP address of that device and it will be the only one that can receive connections for the specified port.

You will notice when you run the above server example that it does not exit after it closes the client connection. In fact it will keep accepting new clients, sending them the hello string, and closing the connection. That is because of Dart’s asynchronous I/O model. The nice part about this is that we don’t have to spawn any threads or explicitly tell the server socket to start listening for another connection. It does this all on its own!
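To see both points in one place (binding to a single interface, and the server handling client after client without threads), here is a small self-contained sketch. It assumes a current Dart SDK (2.12 or later), so it uses InternetAddress.loopbackIPv4 where the 1.x code above would spell the constant in capitals. The function name greetClients() is my own, and port 0 simply asks the operating system for any free port.

```dart
import 'dart:convert';
import 'dart:io';

/// Starts a hello server on the loopback interface only, connects
/// [count] clients one after another, and returns what each one received.
Future<List<String>> greetClients(int count) async {
  // Only connections arriving on 127.0.0.1 will be accepted.
  final server = await ServerSocket.bind(InternetAddress.loopbackIPv4, 0);
  server.listen((Socket client) async {
    client.write('Hello from simple server!\n');
    await client.flush(); // make sure the greeting is sent before closing
    client.destroy();
  });

  final greetings = <String>[];
  for (var i = 0; i < count; i++) {
    final socket =
        await Socket.connect(InternetAddress.loopbackIPv4, server.port);
    // Read everything until the server closes the connection.
    final text = await utf8.decoder.bind(socket).join();
    greetings.add(text.trim());
    socket.destroy();
  }

  await server.close();
  return greetings;
}

Future<void> main() async {
  (await greetClients(3)).forEach(print);
}
```

The single listen() call serves all three connections. Nothing in the code asks the server to go back and wait for the next client.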

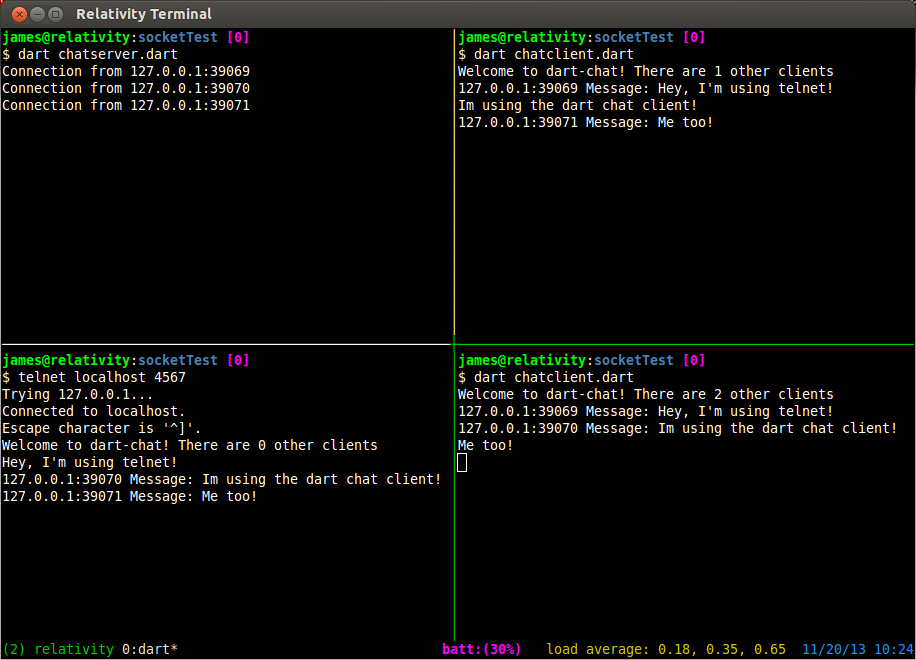

Simple chat room server project

Now that we got our feet wet with some sample programs, it’s time to step it up and write some cool code. Let’s write a simple chat room server. The server will accept connections from clients and add them to the room. When a client sends a message to the room, all other connected clients will receive it. It should also gracefully handle errors and disconnections.

We can build off of our serverexample.dart program. The first problem to tackle is how to know which client is sending a message. When a callback is called, there is no way to know which socket called it. To get around this issue we will make a ChatClient class that will wrap the client socket object and provide the callback functions.

...

...

class ChatClient {

Socket _socket;

String _address;

int _port;

ChatClient(Socket s){

_socket = s;

_address = _socket.remoteAddress.address;

_port = _socket.remotePort;

_socket.listen(messageHandler,

onError: errorHandler,

onDone: finishedHandler);

}

void messageHandler(List data){

String message = new String.fromCharCodes(data).trim();

distributeMessage(this, '${_address}:${_port} Message: $message');

}

void errorHandler(error){

print('${_address}:${_port} Error: $error');

removeClient(this);

_socket.close();

}

void finishedHandler() {

print('${_address}:${_port} Disconnected');

removeClient(this);

_socket.close();

}

void write(String message){

_socket.write(message);

}

}

...

...

This class is simple but necessary. The constructor takes in a Socket object which it will hold internally. It also provides the onData(), onError() and onDone() callback functions that will be used by the socket. The messageHandler() function will read a message from the client and distribute it to the other clients. Let’s take a look at the distributeMessage(ChatClient client, String message) function to see how it works.

...

List<ChatClient> clients = [];

void distributeMessage(ChatClient client, String message){

for (ChatClient c in clients) {

if (c != client){

c.write(message + "\n");

}

}

}

...

The distributeMessage() function will iterate through the list of connected clients and forward the received message to every client except the sender. The clients list acts as our chat room. When a client connects they are added to the room by the handleConnection(Socket client) function which is registered as a callback to the server socket.

ServerSocket server;

void main() {

ServerSocket.bind(InternetAddress.ANY_IP_V4, 4567)

.then((ServerSocket socket) {

server = socket;

server.listen((client) {

handleConnection(client);

});

});

}

void handleConnection(Socket client){

print('Connection from '

'${client.remoteAddress.address}:${client.remotePort}');

clients.add(new ChatClient(client));

client.write("Welcome to dart-chat! "

"There are ${clients.length - 1} other clients\n");

}

Finally when a client disconnects from the chat server, the ChatClient object will remove itself from the client list by calling removeClient(ChatClient client). After it has removed itself from the room it closes the socket.

void removeClient(ChatClient client){

clients.remove(client);

}

You can download the full chat server source code here. To run it simply extract the files and run

$ dart chatserver.dart

To test it out you can use telnet again.

$ telnet localhost 4567
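One detail in messageHandler() is worth a note. String.fromCharCodes() treats every incoming byte as its own character, which is fine for plain ASCII but garbles accented letters when the client's terminal sends UTF-8. A possible alternative, sketched here for a current Dart SDK (2.12 or later) with a helper name of my own choosing, is to decode the bytes as UTF-8.

```dart
import 'dart:convert';

/// Decodes one chunk of socket bytes as UTF-8 and trims the line ending.
/// Assumes the chunk holds whole characters; utf8.decode() throws a
/// FormatException if a multi-byte character is cut off at the chunk edge.
String decodeMessage(List<int> data) => utf8.decode(data).trim();

void main() {
  // Roughly what a UTF-8 terminal sends for the line "café".
  final bytes = utf8.encode('café\r\n');

  print(String.fromCharCodes(bytes).trim()); // prints cafÃ©
  print(decodeMessage(bytes)); // prints café
}
```

For long messages that may arrive in several chunks, a streaming decoder (utf8.decoder bound to the socket) is the safer choice.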

Chat room client

To wrap up this installment let’s implement our own chat room client instead of having to rely on other programs. The client is much simpler than the server. It simply needs to connect to the server, have a way to receive messages, and a way to read messages from the user and send them to the server. As we saw in previous examples, connecting to the server and sending it a message is a snap, so the only new part is reading from the user. To do this we can use the Stdin class. dart:io has a global instance of Stdin open called stdin, so all we need to do is register the onData() callback with it.

import 'dart:io';

Socket socket;

void main() {

Socket.connect("localhost", 4567)

.then((Socket sock) {

socket = sock;

socket.listen(dataHandler,

onError: errorHandler,

onDone: doneHandler,

cancelOnError: false);

})

.catchError((AsyncError e) {

print("Unable to connect: $e");

exit(1);

});

//Connect standard in to the socket

stdin.listen((data) =>

socket.write(

new String.fromCharCodes(data).trim() + '\n'));

}

void dataHandler(data){

print(new String.fromCharCodes(data).trim());

}

void errorHandler(error, StackTrace trace){

print(error);

}

void doneHandler(){

socket.destroy();

exit(0);

}

To use this client simply run the command

$ dart chatclient.dart

after you have started the server.

Try running multiple instances of the client and typing in each of them. You will see the messages getting passed around to the other clients. What’s nice is that it doesn’t matter whether the clients are using telnet or the custom chatclient.dart program, because of the simple text-based nature of the server. You can also use the client to connect over a network or the internet by passing the correct IP address to the Socket.connect(host, int port) method.

Please feel free to expand on the server and client programs! Some things you might want to consider adding are user names, private messaging between users, file sharing, and multiple rooms. Have fun with it! If you have any questions, please leave me a comment below.

“Testing Memory I/O Bandwidth”

2013-10-16

Very frequently at my company, we find ourselves pushing our hardware to its limit. Usually we are able to dig in and find some optimizations that we may have missed before to squeeze some extra performance out of our products. This time started out a little different though. The issue we were seeing did not seem to be caused by CPU speed or memory capacity, but I/O bandwidth.

In an attempt to quadruple the output of one of our products, we hit a hard wall when running at the peak stress level. Stephen, the developer on the project, and I began brainstorming about what the issue might be and why we were hitting a cap. All of the specs on paper seemed to indicate we had more than enough machine to get the job done.

In order to start diagnosing our problem, we looked to a program called mbw which is a memory bandwidth benchmark tool. We installed it from the Ubuntu repository using sudo apt-get install mbw. As we found out later, this installed version 1.1.1 of this software (and yes this is important… keep reading). Running the software is easy. The simplest option is to just pass in an array size (in MB). For brevity I am only showing the average results, instead of all results.

$ mbw 32 | grep AVG

AVG Method: MEMCPY Elapsed: 0.00600 MiB: 32.00000 Copy: 5332.889 MiB/s

AVG Method: DUMB Elapsed: 0.00422 MiB: 32.00000 Copy: 7589.413 MiB/s

AVG Method: MCBLOCK Elapsed: 0.00164 MiB: 32.00000 Copy: 19465.904 MiB/s
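The Copy column is just the amount of data copied divided by the elapsed time. The sketch below shows that arithmetic around a pair of gettimeofday() samples, which is how the mbw source further down brackets each copy. The helper names elapsed_seconds and mib_per_second are my own for illustration and are not taken from the mbw source.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/time.h>

/* Hypothetical helper: seconds between two gettimeofday() samples. */
double elapsed_seconds(const struct timeval *start, const struct timeval *end)
{
    return (double)(end->tv_sec - start->tv_sec)
         + (double)(end->tv_usec - start->tv_usec) / 1e6;
}

/* Hypothetical helper: throughput in MiB/s for `bytes` copied in `seconds`. */
double mib_per_second(size_t bytes, double seconds)
{
    assert(seconds > 0.0);
    return ((double)bytes / (1024.0 * 1024.0)) / seconds;
}
```

For the MEMCPY row above, 32 MiB in roughly 0.006 seconds works out to about 5333 MiB/s, which lines up with the reported 5332.889 MiB/s once you allow for the rounding of the printed elapsed time.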

Another useful option is to specify the size of the “block” to use in the MCBLOCK test. To specify this option you can use the -b flag.

$ mbw -b 4096 32 | grep AVG

AVG Method: MEMCPY Elapsed: 0.00589 MiB: 32.00000 Copy: 5428.421 MiB/s

AVG Method: DUMB Elapsed: 0.00421 MiB: 32.00000 Copy: 7598.062 MiB/s

AVG Method: MCBLOCK Elapsed: 0.00064 MiB: 32.00000 Copy: 50172.468 MiB/s

Whoa! Hold the phone! Do you notice something about these results? Why is the MCBLOCK test a whole order of magnitude faster than the MEMCPY test? This made both Stephen’s jaw and mine drop. What was being done to get this much throughput? This is where the story really begins.

The first thing we did was grab a copy of the source. The first source that we found was for version 1.2.2 (at this time we didn’t know it was different from the version we had installed). We started digging through the source and found the worker function that performed the three tests.

if(type==1) { /* memcpy test */

/* timer starts */

gettimeofday(&starttime, NULL);

memcpy(b, a, array_bytes);

/* timer stops */

gettimeofday(&endtime, NULL);

}

else if(type==2) { /* memcpy block test */

gettimeofday(&starttime, NULL);

for(t=0; t<array_bytes; t+=block_size) {

b=mempcpy(b, a, block_size);

}

if(t>array_bytes) {

b=mempcpy(b, a, t-array_bytes);

}

gettimeofday(&endtime, NULL);

}

else { /* dumb test */

gettimeofday(&starttime, NULL);

for(t=0; t<asize; t++) {

b[t]=a[t];

}

gettimeofday(&endtime, NULL);

}

This is the code snippet from worker() in mbw.c on line 92. The first thing we discovered was that the MCBLOCK test was using the mempcpy() function. I had never used mempcpy() before, so I was intrigued! Of course, the mystery only deepened when we looked at the mempcpy man page.

The mempcpy() function is nearly identical to the memcpy(3) function. It copies n bytes from the object beginning at src into the object pointed to by dest. But instead of returning the value of dest it returns a pointer to the byte following the last written byte.

mempcpy(3) man page

They really weren’t kidding with the “nearly identical” part either. As soon as I dug into the glibc source code, it became very apparent something strange was going on.

void *

__mempcpy (void *dest, const void *src, size_t len)

{

return memcpy (dest, src, len) + len;

}

libc_hidden_def (__mempcpy)

weak_alias (__mempcpy, mempcpy)

libc_hidden_builtin_def (mempcpy)
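To make the difference in return values concrete, here is a small sketch. Because mempcpy() is a GNU extension, it uses a portable stand-in that mirrors the glibc definition above. The names copy_then_end and join_two are hypothetical and exist only for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Portable stand-in for mempcpy(): copy len bytes, then return a pointer
 * to the byte just past the last one written (memcpy would return dest). */
void *copy_then_end(void *dest, const void *src, size_t len)
{
    return (char *)memcpy(dest, src, len) + len;
}

/* Concatenate two buffers into out by chaining the returned pointer.
 * Returns the total number of bytes written. The caller must make sure
 * out has room for a_len + b_len bytes. */
size_t join_two(char *out, const void *a, size_t a_len,
                const void *b, size_t b_len)
{
    assert(out != NULL);
    char *p = out;
    p = copy_then_end(p, a, a_len);   /* p now points just past a */
    p = copy_then_end(p, b, b_len);   /* so b lands right after it */
    return (size_t)(p - out);
}
```

Chained like this, each call picks up where the previous one left off, with no separate offset bookkeeping. That only works if the returned pointer is actually fed into the next call.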

So why was the mempcpy() code running so much faster than the memcpy() code if one is simply calling the other? The answer would soon surface! The next thing we did was compile the 1.2.2 source that we had downloaded and run it. To our amazement, we were getting much lower bandwidth for what seemed like no reason.

$ ./mbw-1.2.2 -b 4096 8 | grep AVG

AVG Method: MEMCPY Elapsed: 0.00292 MiB: 8.00000 Copy: 2743.861 MiB/s

AVG Method: DUMB Elapsed: 0.00116 MiB: 8.00000 Copy: 6871.081 MiB/s

AVG Method: MCBLOCK Elapsed: 0.00098 MiB: 8.00000 Copy: 8145.810 MiB/s

We didn’t understand. We hadn’t changed anything; we had simply compiled and executed the code, and yet the Ubuntu package version was reporting huge bandwidths and this version was not. I started to suspect that the version in the repo was different somehow, and I was right! We ran apt-get source mbw, and sure enough we got version 1.1.1. Running a diff between the two source files showed that the MCBLOCK test had been updated.

/* in version 1.1.1 */

for(t=0; t<array_bytes; t+=block_size) {

c=mempcpy(b,a,block_size);

}

/* in version 1.2.2 */

for(t=0; t<array_bytes; t+=block_size) {

b=mempcpy(b,a,block_size);

}

Well, that solves that mystery! In version 1.1.1 (the one installed by apt-get), the return value of mempcpy() was assigned to c and never used, so the program wrote the same block_size chunk of destination memory over and over. That chunk stayed in the CPU cache, which inflated the measured bandwidth. The newer version properly advances the destination pointer, eliminating those cache hits and lowering the bandwidth measurements.
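A rough way to see the difference between the two loops is to count how much of the destination each one actually touches. The sketch below is not mbw code: block_copy_fixed_dest, block_copy_advancing_dest and count_overwritten are hypothetical names, memcpy plus an offset stands in for mempcpy(), and it assumes array_bytes is a multiple of block_size.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Mirrors the 1.1.1 loop: the advanced pointer lands in an unused
 * variable, so every iteration overwrites the first block of dst. */
void block_copy_fixed_dest(char *dst, const char *src,
                           size_t array_bytes, size_t block_size)
{
    assert(block_size > 0 && array_bytes % block_size == 0);
    for (size_t t = 0; t < array_bytes; t += block_size) {
        char *c = (char *)memcpy(dst, src, block_size) + block_size;
        (void)c;  /* the result is never used */
    }
}

/* Mirrors the 1.2.2 loop: dst advances each iteration, src does not. */
void block_copy_advancing_dest(char *dst, const char *src,
                               size_t array_bytes, size_t block_size)
{
    assert(block_size > 0 && array_bytes % block_size == 0);
    for (size_t t = 0; t < array_bytes; t += block_size) {
        dst = (char *)memcpy(dst, src, block_size) + block_size;
    }
}

/* Count bytes in buf that no longer hold the sentinel value. This is
 * only meaningful if the copied data never contains the sentinel. */
size_t count_overwritten(const char *buf, size_t n, char sentinel)
{
    size_t written = 0;
    for (size_t i = 0; i < n; i++) {
        if (buf[i] != sentinel) {
            written++;
        }
    }
    return written;
}
```

With a 64-byte destination pre-filled with zeros, a non-zero source and 16-byte blocks, the first loop leaves 16 bytes overwritten and the second leaves all 64. Scale that up to a 4096-byte block rewritten thousands of times and the destination never has to leave the cache.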

Now, does anything else stand out about the 1.2.2 code? Well if you guessed that the source pointer was not being advanced, you would be correct! So these numbers were still a bit off. After making the correction we got much more consistent measurements.

/* in the corrected version (now 1.3.0) */

char* aa = (char*)a;

char* bb = (char*)b;

gettimeofday(&starttime, NULL);

for (t=array_bytes; t >= block_size; t-=block_size, aa+=block_size){

bb=mempcpy(bb, aa, block_size);

}

if(t) {

bb=mempcpy(bb, aa, t);

}

gettimeofday(&endtime, NULL);

$ ./mbw-1.3.0 -b 4096 8 | grep AVG

AVG Method: MEMCPY Elapsed: 0.00288 MiB: 8.00000 Copy: 2778.067 MiB/s

AVG Method: DUMB Elapsed: 0.00113 MiB: 8.00000 Copy: 7107.952 MiB/s

AVG Method: MCBLOCK Elapsed: 0.00166 MiB: 8.00000 Copy: 4817.246 MiB/s
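One way to sanity-check a block copy like the corrected loop is to compare the destination against the source afterwards, including sizes that are not a multiple of the block size. The following is a sketch rather than the actual mbw 1.3.0 code: block_copy is a hypothetical name, and memcpy plus an offset stands in for mempcpy() so the example does not depend on the GNU extension.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copy n bytes from src to dst in block_size chunks, advancing both
 * pointers, then copy whatever tail is left over.
 * Returns a pointer to the byte just past the last one written. */
char *block_copy(char *dst, const char *src, size_t n, size_t block_size)
{
    assert(block_size > 0);
    size_t remaining = n;
    while (remaining >= block_size) {
        dst = (char *)memcpy(dst, src, block_size) + block_size;
        src += block_size;
        remaining -= block_size;
    }
    if (remaining) {
        /* Tail shorter than one block. */
        dst = (char *)memcpy(dst, src, remaining) + remaining;
    }
    return dst;
}
```

After a call such as block_copy(dst, src, n, 4096), the returned pointer should equal dst + n and memcmp(dst, src, n) should return 0. Neither check would pass for the earlier loops, since the source pointer never moved.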

I am happy to report that these changes were merged into the mainline release at the raas/mbw GitHub page. So if you are going to use mbw for benchmarking your memory throughput, I highly recommend you use the new 1.3.0 version.

If you have a multi-cpu system and want to see what your total average throughput is you can use this script below. It will detect how many processors you have and spawn the matching number of mbw instances. It will then sum up the average measurements. Feel free to modify it as needed.

#! /usr/bin/env bash

# This will run an mbw instance for each core on the machine

NUMCORES=$(grep "processor" /proc/cpuinfo | wc -l)

TMP="/tmp/mbw_result_tmp"

echo "Starting test on $NUMCORES cores"

for (( i=0; i<$NUMCORES; i++ )); do

mbw -b 4096 32 -n 100 > ${TMP}_${i} &

done

echo "Waiting for tests to finish"

wait

MEMCPY_RESULTS=()

DUMB_RESULTS=()

MCBLOCK_RESULTS=()

for (( i=0; i<$NUMCORES; i++ )); do

MEMCPY_RESULTS[$i]=`grep -E "AVG.*MEMCPY" ${TMP}_${i} | \

tr "[:blank:]" " " | cut -d " " -f 9`

DUMB_RESULTS[$i]=`grep -E "AVG.*DUMB" ${TMP}_${i} | \

tr "[:blank:]" " " | cut -d " " -f 9`

MCBLOCK_RESULTS[$i]=`grep -E "AVG.*MCBLOCK" ${TMP}_${i} | \

tr "[:blank:]" " " | cut -d " " -f 9`

done

MEMCPY_SUM=0

DUMB_SUM=0

MCBLOCK_SUM=0

# Need to use `bc` because of floating point numbers

for (( i=0; i<$NUMCORES; i++ )); do

MEMCPY_SUM=`echo "$MEMCPY_SUM + ${MEMCPY_RESULTS[$i]}" | bc -q`

DUMB_SUM=`echo "$DUMB_SUM + ${DUMB_RESULTS[$i]}" | bc -q`

MCBLOCK_SUM=`echo "$MCBLOCK_SUM + ${MCBLOCK_RESULTS[$i]}" | bc -q`

done

echo "MEMCPY Total AVG: $MEMCPY_SUM MiB/s"

echo "DUMB Total AVG: $DUMB_SUM MiB/s"

echo "MCBLOCK Total AVG: $MCBLOCK_SUM MiB/s"

Using the 1.3.0 version of mbw, as well as some good old-fashioned detective work, we were able to find the perfect combination of software and hardware optimizations to push our product to the next level. It is still in early beta, but hopefully in a few months it will be finalized and I can release more details!

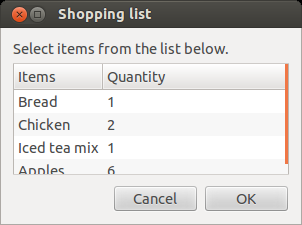

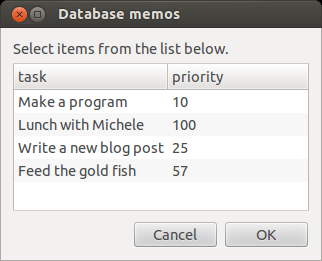

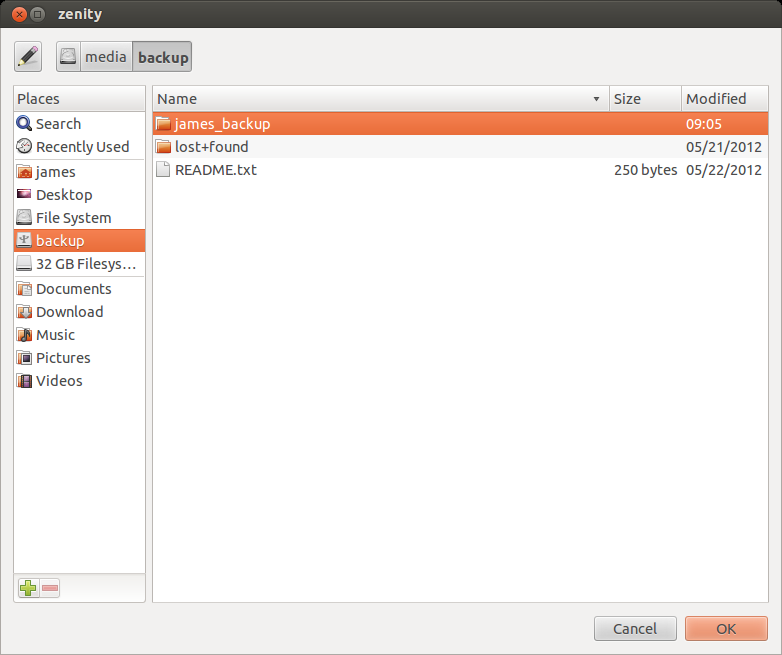

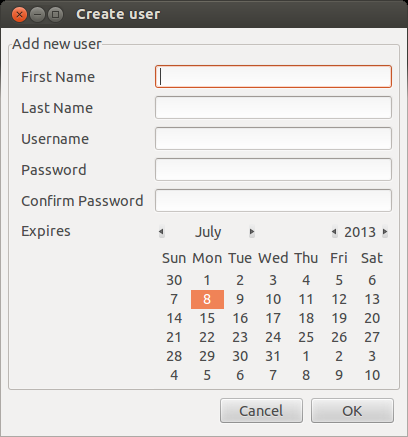

In Part 1 of using Zenity we covered some pretty cool GUI components that can be used from a shell script. This time we will be finishing up with some examples of how to integrate some more complex gtk+ widgets into your script.

One of the most fundamental outputs any long running script should produce is some form of progress. Everyone loves a well tuned, accurate progress bar! Conversely, everyone hates a progress bar that goes to 99% immediately and sits there for 10 minutes. Getting a progress bar perfect is an art in and of itself, but that’s not really the point of this tutorial. I’m just going to show you how a Zenity progress bar works.

The Zenity progress bar is activated through the --progress flag. The progress bar is different from the other components because it actively listens on standard input for progress and text to display. Any text you want displayed above the progress bar must be on a line that begins with a '#' character. Any line that contains only a number is taken as the new percentage. As you can see in the example below, before I copy a file, I echo the file name with a '#' character before it. Then I perform the copy operation and update the percentage. The output of the entire for loop is piped to the progress bar.

#! /usr/bin/env bash

##

# This script will copy all of the specified files to the destination

# usage ./copy.sh file1 file2 ... fileN destination/

#

numberOfArgs=$#

if [ $numberOfArgs -le 0 ]; then

echo "Usage: ./copy.sh file1 file2 ... fileN destination/" >&2

exit 1

elif [ $numberOfArgs -le 1 ]; then

echo "You must specify a destination" >&2

exit 1

fi

destination=${@: -1}

for (( i=1; i<$numberOfArgs; i++ )); do

echo "# ${1}"

cp "${1}" "$destination"

echo "$(( (i * 100) / (numberOfArgs - 1) ))"

sleep 0.5 #So that you can see the items being copied

shift 1

done | zenity --progress --title="Copy files to $destination" --percentage=0

if [ "$?" -eq 1 ]; then